YouTube recommends more election fraud videos to skeptics, NYU study finds

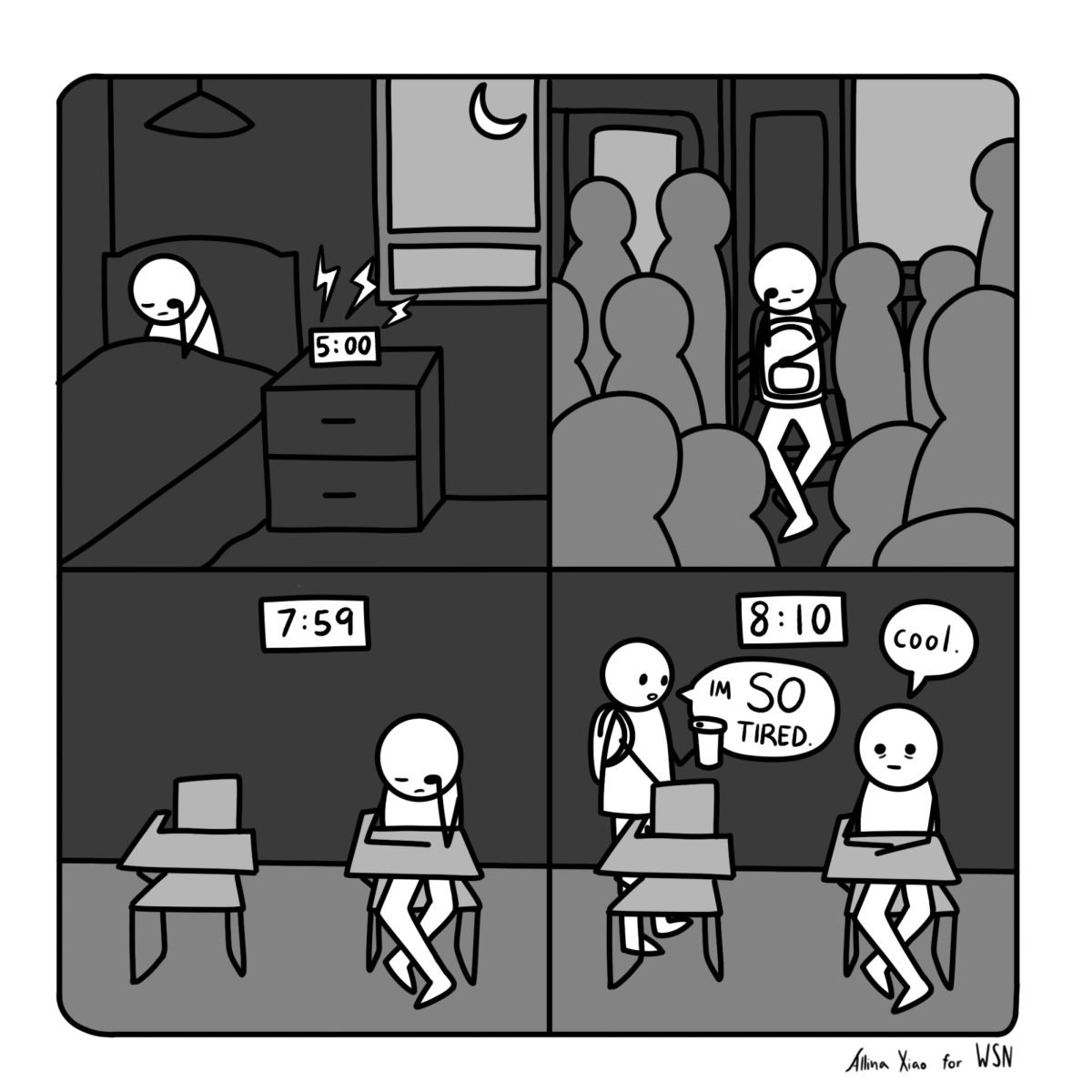

YouTube's algorithm is more likely to recommend election fraud videos to people who already doubt the legitimacy of the election.

September 24, 2022

A study from NYU’s Center for Social Media and Politics found that YouTube’s algorithm recommended election fraud-related videos about three times more often to individuals who were already skeptical of the 2020 presidential election than to those who were not.

Out of about 400 recommendations, the study found that the most skeptical viewers were shown an average of eight more election fraud videos than non-skeptical viewers.

James Bisbee, the project’s lead researcher, said the study was conducted during the 2020 presidential election, amid heightened public concern that social media algorithms were encouraging radical political beliefs. Motivated by how little evidence they found to support these claims, Bisbee and his team asked study participants to rate their concern about election fraud on a scale from zero to 100 and compared those ratings with the participants’ YouTube recommendations.

The results showed that the algorithm tended to recommend videos about election fraud to skeptics, although the study’s authors said the suggested videos did not always promote election-related conspiracy theories.

“I don’t think it means that these people were being radicalized by the recommendation,” Bisbee said. “I don’t think the people who went to Jan. 6 could have made that choice as a function of eight more recommendations. But I do think it’s evidence of the independent influence of the algorithm.”

Suggested content included videos that supported, disputed or were neutral toward claims of election fraud. The study’s authors caution against overinterpreting the results: the highest number of election fraud-related videos amounted to only 12 of about 410 total recommendations.

“We’ve had some other press coverage from NBC and the Verge and what not, and I found on Twitter, one or two people only reading the headline, and then saying, YouTube caused Jan. 6,” Bisbee said. “That is not at all consistent with what we have or what our findings suggest. In the same way that we need to combat misinformation by reading carefully, I would encourage people who read my paper to read it carefully.”

Bisbee said his team is now conducting follow-up studies. They will examine how YouTube’s algorithm reacts to and influences broad political leanings, as well as how it interacts with specific political issues.

Contact Nicole Lu at [email protected].