“This set of lecture notes was improved using the ChatGPT editing function.”

For as long as I have been at NYU, students and faculty have debated the ethics of artificial intelligence and its impact in the classroom. However, it was only this fall that I saw a professor — or anybody for that matter — openly acknowledge they used AI. What further caught me off guard was the explicit permission to use AI featured in the syllabus. This experience made me reflect on the state of AI at NYU — most classes tend to speak on it in some way or another, yet there is a clear lack of coherent policies guiding its usage. In light of such ambiguity, the university needs to reframe its policy to focus on teaching AI literacy, as opposed to fully dismissing it as a cheating tool for those who wish to cut corners.

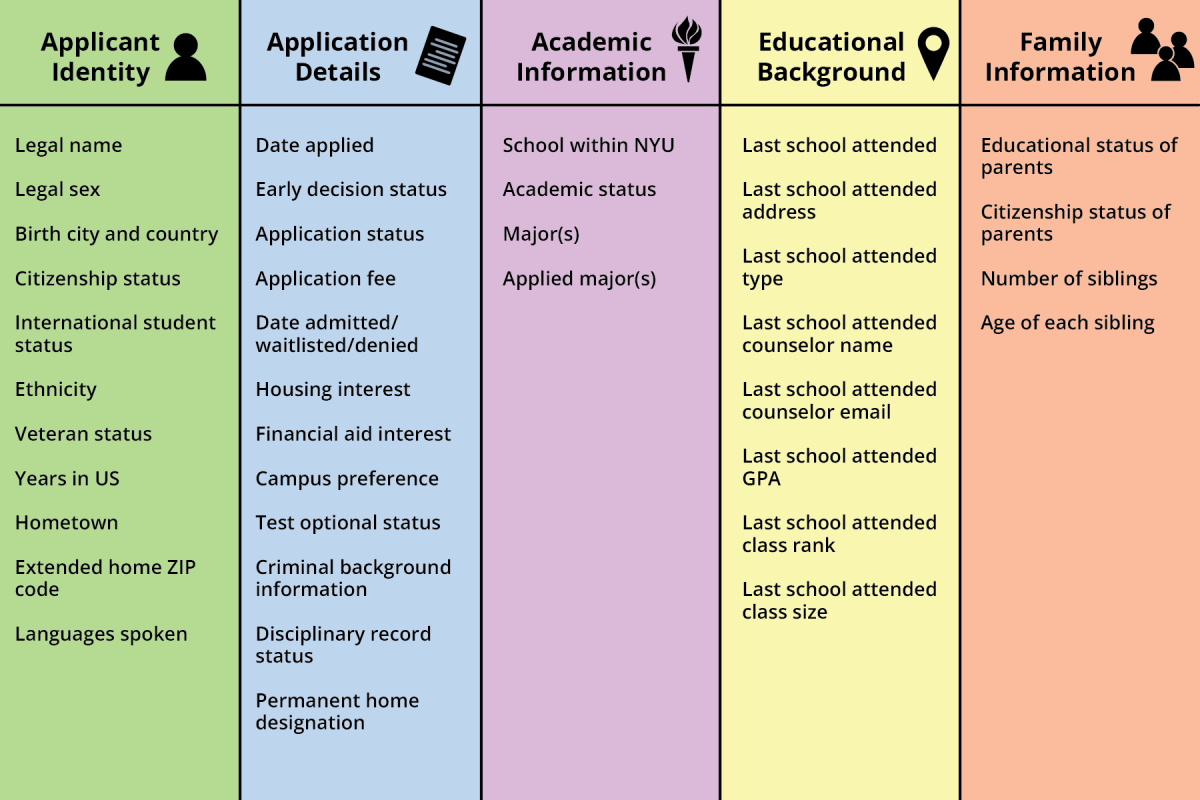

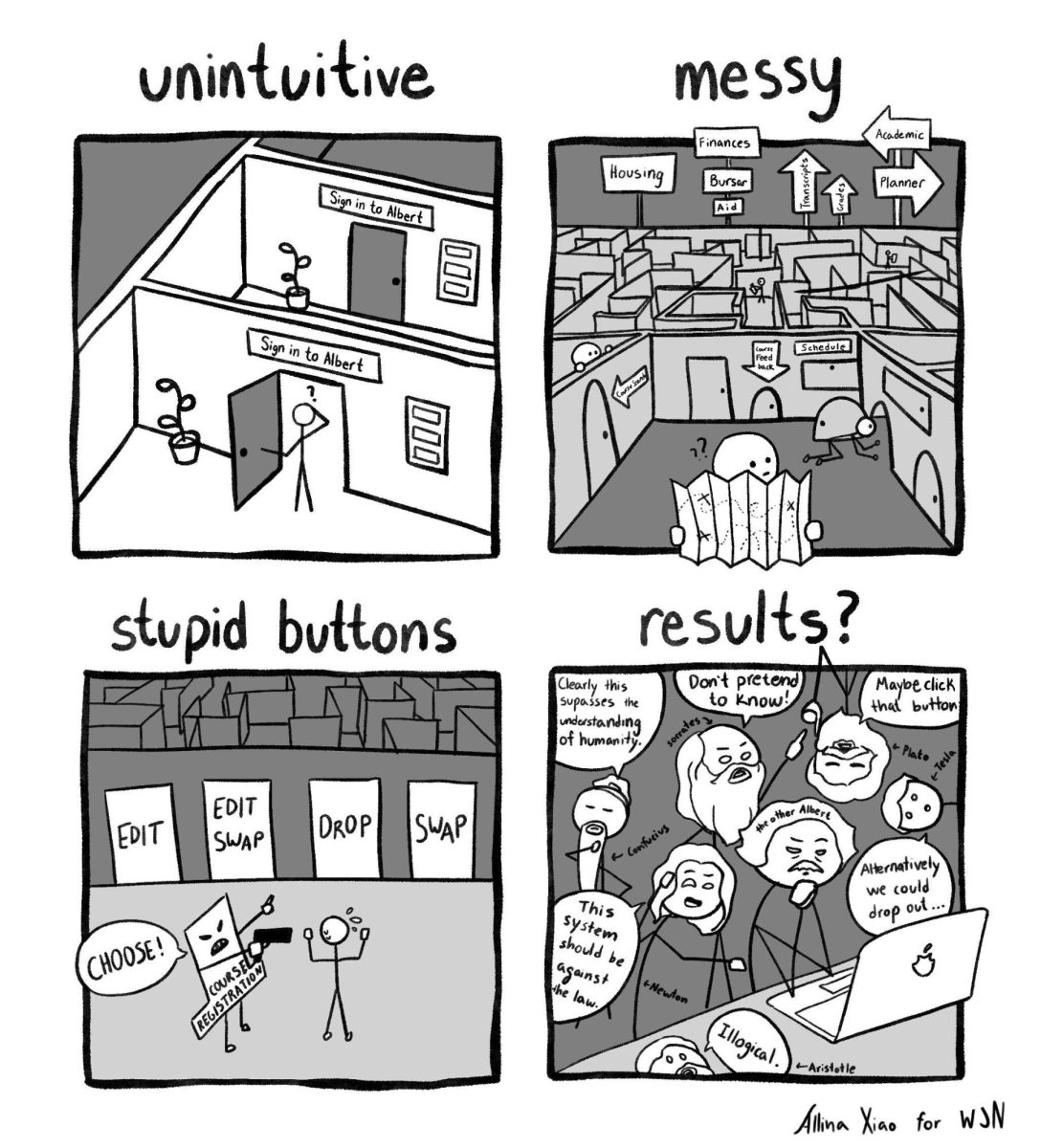

Policies concerning academic integrity, which typically detail how cheating and plagiarism are prohibited and punishable offenses, are consistently featured on class syllabi. In general, presenting work without acknowledging the source, including AI, is considered a form of plagiarism at NYU. Yet there is no consistent policy on AI usage. The university does, however, suggest three potential strategies for handling the technology in class: integrating, avoiding or forbidding it in day-to-day classwork.

Whether AI should be used in class, and to what extent, is left to individual faculty members. For those wishing to integrate AI in the classroom, the Provost’s office advises professors to state in their syllabi the reasons for allowing AI, the do’s and don’ts, and the tools’ limits. Others may choose to either continuously adjust assignments to make them unsolvable with AI or simply forbid it and pretend ChatGPT does not exist.

What we see in classrooms today is a patchwork of different AI rules, indicating just how lost the university is in understanding and reaping the benefits of the technology. NYU’s policy has unfairly shifted the responsibility of combating plagiarism and cheating — along with teaching a new skill set — onto faculty, instead of providing a set of clear instructions and expectations to staff and students.

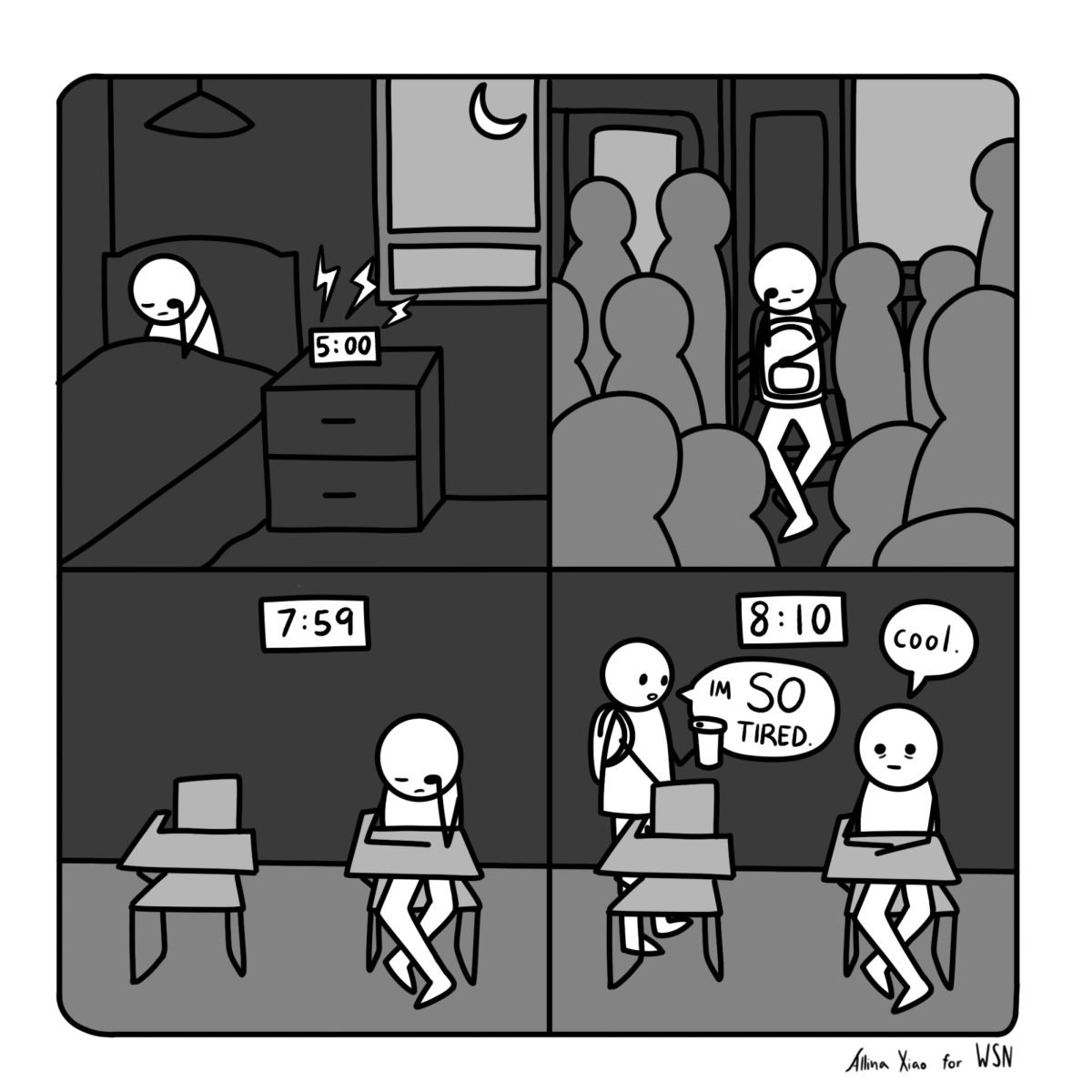

Navigating the murky waters of generative AI has made most professors apprehensive toward the technology, with many warning students of terrible consequences for using it or avoiding the subject entirely in their syllabi. Even in classes that don’t outright ban its use, students are rarely provided clear instructions or given class time to workshop permitted usage. These guidelines cannot be found outside of the classroom either, as there are no resources for students to learn how to use AI in a critical, fact-checked and properly cited manner. Instead, the moral and academic responsibility of proper AI use is left to the students to figure out, creating unnecessary anxiety about using the tool. That task is nearly impossible, as the rules almost always vary, and sometimes contradict one another, from class to class.

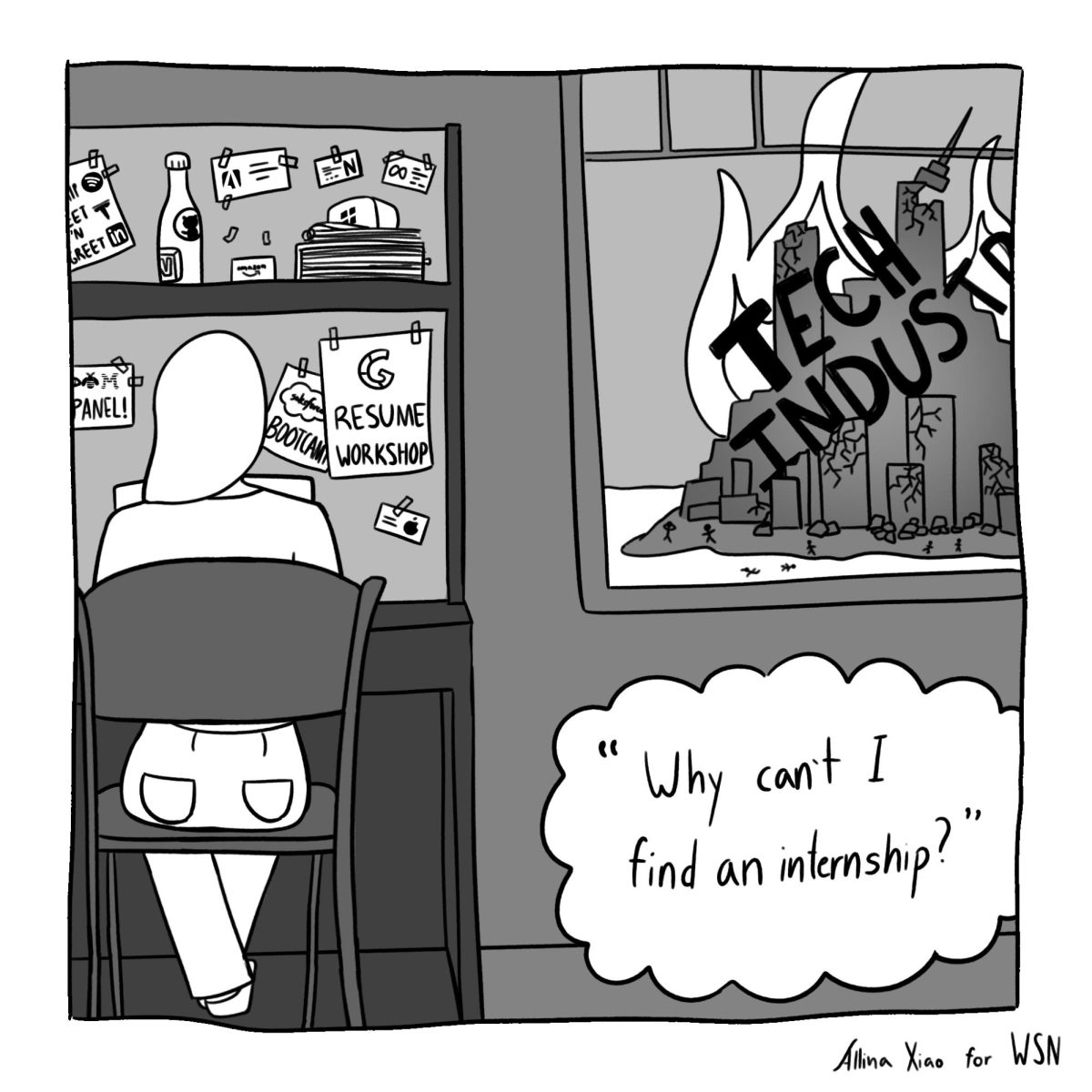

The place of AI at NYU is nothing short of ambiguous. For some students and professors, AI has already been fully integrated into their academics, with ChatGPT acting as a search engine, editor, idea generator or even an assignment solver. Others are behind the times, fearing the technology’s power and possible repercussions. But one thing remains clear — the use of AI isn’t going anywhere. The university has already said that “there is no longer any obvious line dividing acceptable from unacceptable [AI] use.”

The administration needs to embrace the fact that the only way forward is guided AI use backed by clear direction. Forbidding or avoiding the use of AI will not stop students from improperly using the tool to help with their school load. However, the university can guide students toward ethical AI use, such as creating study guides, organizing notes and gathering background information about a topic. These are all ways students can use AI as a tool to supplement their growth and learning, not as a method to cheat. It seems certain that the world into which we graduate will be one where AI is ever-present and unavoidable. Without a clear AI policy, students are not given the freedom to test the limits and possibilities of this technology.

WSN’s Opinion section strives to publish ideas worth discussing. The views presented in the Opinion section are solely the views of the writer.

Contact Andreja Zivkovic at [email protected].

Clay Shirky • Oct 10, 2024 at 4:41 pm

Andreja, thank you for this thoughtful piece. I share your concern with increasing clarity of AI use at NYU, but even given that goal, there will never be much in the way of standardized policy.

I am the Vice Provost for AI and Technology in Education at NYU, which fancy title just means that whenever anything digital touches the classroom, my job is to help people adapt. In the case of AI, that means navigating the murky waters you describe.

You write “The place of AI at NYU is nothing short of ambiguous.” This is true, but that is because the place of AI _everywhere_ is nothing short of ambiguous.

No industry or field has arrived at a commonplace integration of AI capabilities with human practices. The breathless reports of imminent change that we saw last year have given way to more sober-minded assessments of the difficulties in integrating tools this complex and unpredictable. As we often say in the Provost’s office, these tools are going to transform higher education, but that transformation is going to take forty semesters, not four.

The reason there is no uniform guidance to faculty and students at NYU is not from lack of trying, but from competing commitments. We hear from computer science instructors that AI use will be required of their students when they graduate, so they regard integrating it into their students’ work as imperative. We hear from screenwriting instructors that AI use is forbidden by contract in the union those students aspire to join after graduation, so they regard banning it from their students’ work as imperative.

Little I could say as an administrator could cover both cases.

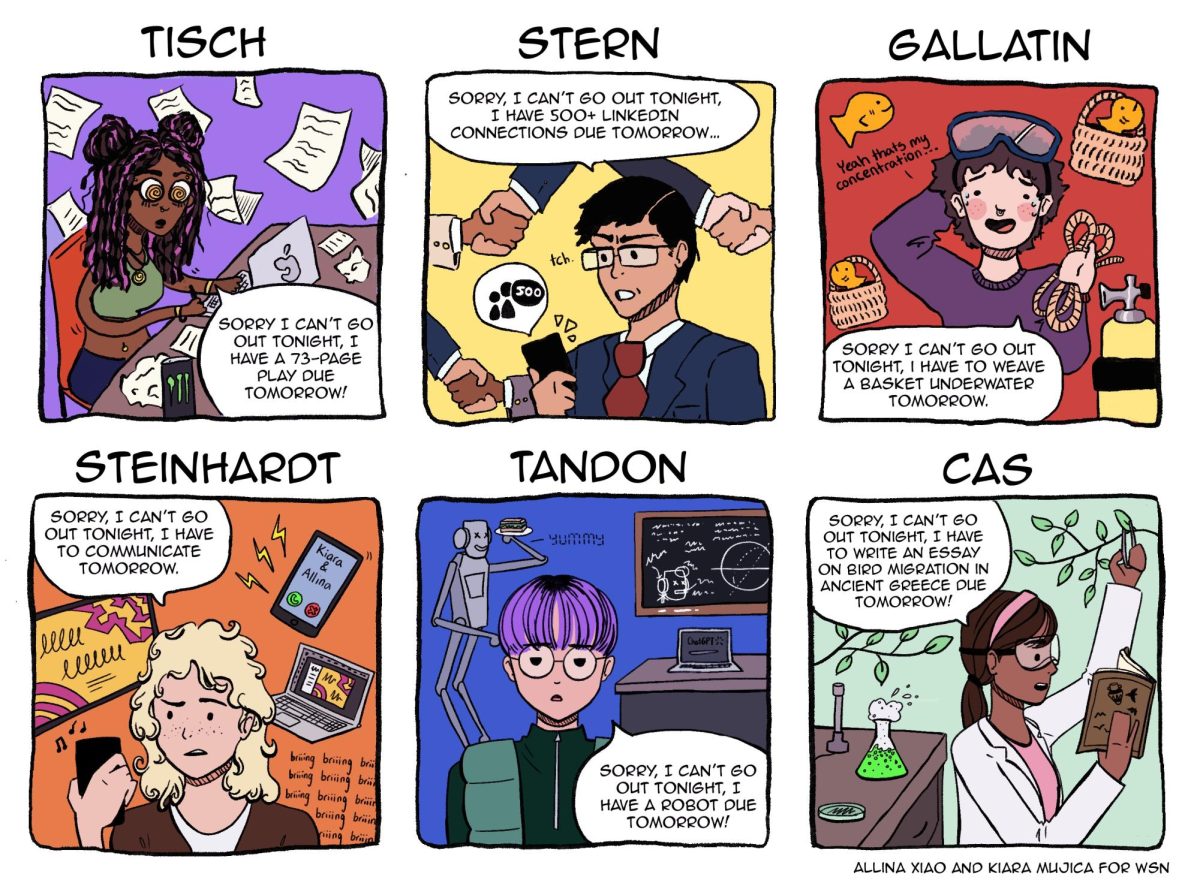

The gift and curse of NYU is variety. We have 600 different kinds of degrees, and every year we see students who become project managers or philosophers, actors or accountants, chemists or choreographers. Within those departments, faculty have a wide range of preferences for AI use, and within their courses there will be assignments that are more or less amenable to AI use, and among the students, there will be a range of strategies. None of us can specify policies that will fit all of those contexts.

Our goal is to aid faculty and student judgement about AI, not to replace it. We can do a better job helping both faculty and students navigate the welter of options, and we are painfully aware that there is still too much confusion and too little conversation in individual classes. Every semester, we work to offer more guidance and more material designed to help that conversation. But even as we improve, we will never converge on a uniform approach, because NYU is too varied.