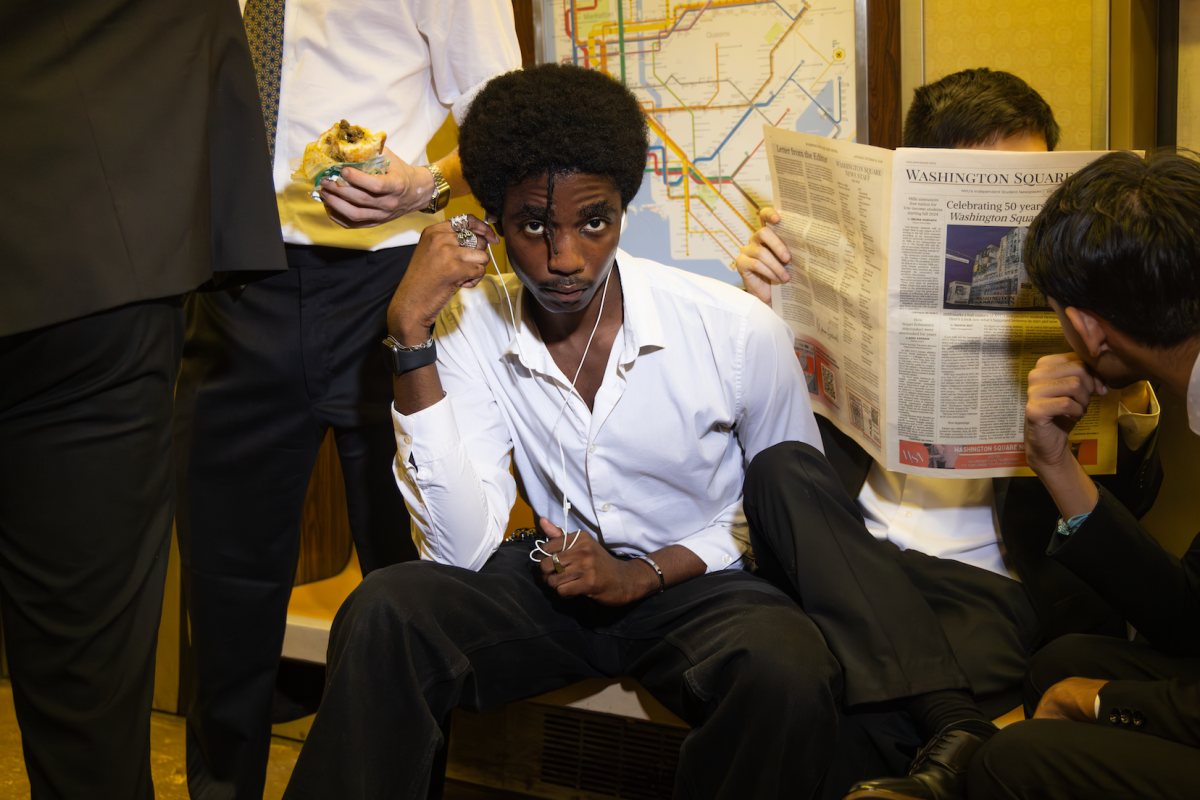

NYU students may think of their iPhones when they hear the phrase “facial recognition,” but a panel of experts talked about its potential positive effects in combating terrorism, and negative effects on issues of privacy and human rights.

Cameras are now being used to monitor the movement of terrorist groups — they are also used to oppress minority groups.

Professionals in the field of counterterrorism spoke at the Brennan Center for Justice on Tuesday, warning about the human rights implications of biometric tools such as facial recognition, saying the tools could lead to racial profiling and discrimination.

The use of biometric tools, which collect data through face, fingerprint, movement and iris scans, to identify terrorist groups and enforce the law has spiked among governments worldwide, including the United States. Biometric data has been used to track patterns of terrorist groups like the Islamic State of Iraq and the Levant, tracking them when they return to their countries of origin before heading to conflict zones, according to the panel. Sometimes the data is used for less specific purposes, like monitoring migration patterns of refugees and for forensic use.

As of now, there are no clear laws regarding the ethics and regulation of data collection for law enforcement, which has led some to raise concerns about potential human rights issues.

Uighur Muslims under China’s high-tech surveillance system are actively profiled and oppressed. The system collects data through facial recognition, fingerprint scanning and iris recognition to actively profile the racial minority and place them into concentration camps across the Xinjiang region of China.

“It is clear to see that the technological advancements in identification, such as facial recognition, are ahead of the legislation,” Head of Advocacy and Policy Team at Privacy International Tomaso Falchetta said. “Who has access to these large data sets? Should all this data even exist? This field of biometrics is growing so fast and it has the ability to be implemented in so many fields yet few are concerned with regulating it.”

The United Nations Security Council has required States in Resolution 2396 to “develop and implement systems to collect biometric data” and calls for enhanced information-sharing, though few regulations have been put in place.

“Countries barely have proper regulations on regular data collection, let alone data collection and its use for law enforcement,” Advisor to the U.N. Special Rapporteur on Counter-terrorism and Human Rights Krisztina Huszti-Orban said. “We have seen these issues with very real world impacts for the past decade, but most prominently in the past five years.”

Besides China, Israel has used biometrics to monitor Palestinians, often placing Palestinians (many of whom are civilians) on lists that restrict their movement without charging them with any crimes in particular.

Counsel on the Liberty & National Security Program at the Brennan Center for Justice Ángel Díaz brought up issues with biometrics in the private sector as well. For instance, Tesla was very close to going to market with its line of self-driving cars. Late in the process, developers found that the car's algorithm did not recognize bikes.

Díaz said that algorithms are often flawed, negatively affecting certain communities.

“But it transcends just bikes,” Díaz said. “Instead of bikes it can be people; people with disability or people of color. There is no unbiased algorithm because all algorithms are made by humans.”

Algorithms and artificial intelligence have come under fire for reinforcing discriminatory practices. Examples range from a resume-scanning AI that lowers its rating when the word “woman” is included to crime predictors that disproportionately target people of color, and scientists continue to struggle with the issue.

New developments in biometrics have even included emotion and aggression recognition, which panelists said is likely to be used by law enforcement soon.

“The developers of these technologies may not be racist, but the reality is they can reflect their bias unintentionally into their work,” Huszti-Orban said. “This means there are people who are disproportionately likely to be jailed like people of color, refugees and other at risk groups.”

NYU Law student Esperanza López attended the event and said it was an example of interdisciplinary collaboration.

“This is why events like these are so important,” López said. “It’s great to see people who are in the tech field ask for feedback from people in academia law, engaging with those in civil society and experts in human rights.”

Email Mina Mohammadi at [email protected].