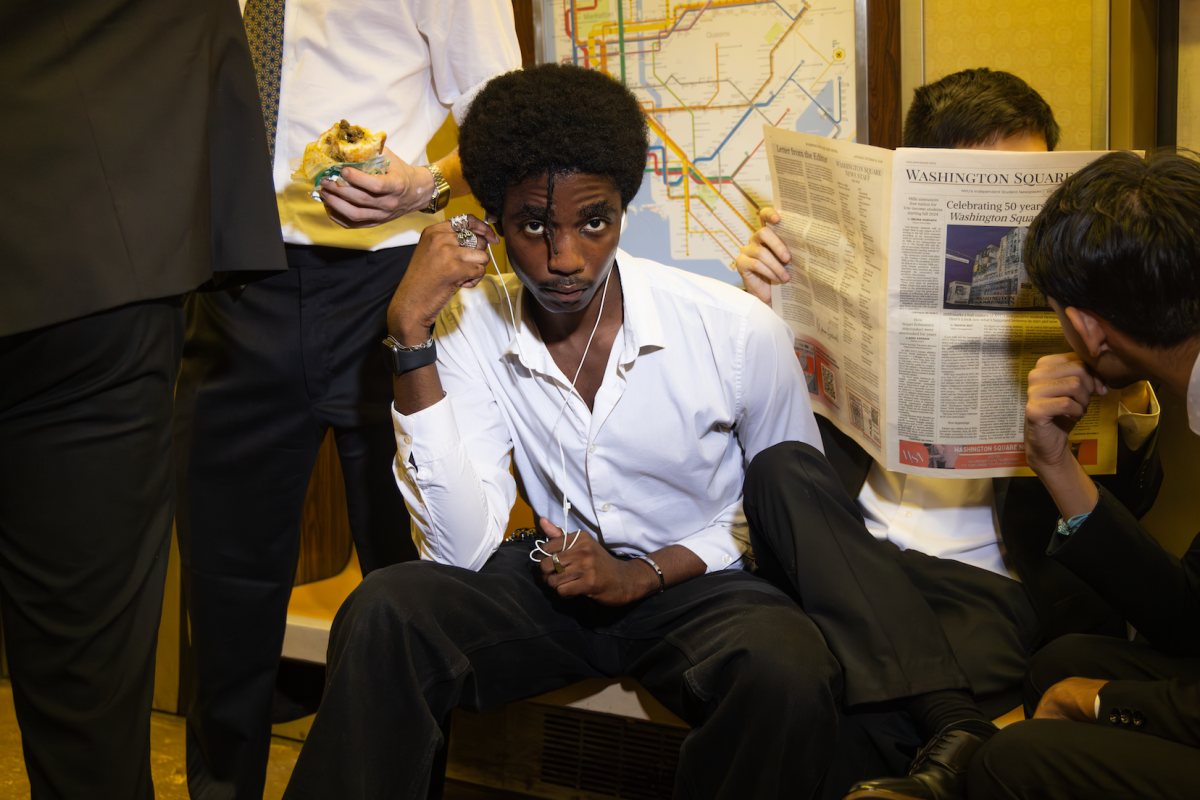

Artificial intelligence tools created by leading technology companies have come under fire in recent years for discriminating against women, people of color and other minorities.

Last year, Reuters reported that Amazon discontinued a resume-scanning AI tool that was found to penalize resumes containing the word “women.” Algorithms that are used in courtrooms to predict whether a defendant will commit future crimes are biased against African Americans. Facial recognition tools are notoriously problematic: in 2015, Google Photos miscategorized a black person’s face as a gorilla; Uber’s face recognition verification tool doesn’t work for some transgender drivers; and one study found that self-driving cars may be more likely to hit pedestrians of color.

Researchers from NYU’s AI Now Institute have linked these biased algorithms to the lack of diversity in the AI industry, according to a report released last week.

Postdoctoral researcher Sarah Myers West, one of the study’s authors, told WSN that these phenomena cannot be viewed separately.

“What we traced out is that these two things are interrelated,” West said. “And in addressing them, we need to sort of get into that interstitial space between them, and really understand this feedback loop that is produced by discriminatory workplaces and discriminatory tools.”

The researchers argue that these algorithms are biased because the people creating them are mostly white and male. In the study, the researchers noted that 80% of AI professors are men and that women have authored only 18% of peer-reviewed publications at leading AI conferences in recent years.

The researchers also wrote that some AI tools shouldn’t exist at all, like algorithms that claim to identify a person’s sexuality or predict criminality based on a headshot.

“This is the reemergence of biological determinism, which is a really flawed approach to science, and one that’s been largely debunked,” West said. “And so I think there’s a real concern about whether we should be building systems based on these approaches at all.”

Meredith Whittaker, a co-founder of the institute and one of the study’s authors, is an AI researcher at Google and was one of seven employees who organized a 20,000-person walkout in November over how the company handles sexual harassment. After organizing the walkout, Whittaker experienced retaliation from Google and was recently told to leave her job at NYU’s institute.

“It’s about silencing dissent and making us afraid to speak honestly about tech and power,” Whittaker said via Twitter.

At Facebook, women make up 15% of AI researchers; at Google, they make up 10%. The researchers also found that less than 5% of the workforces at Google, Facebook and Microsoft are black, compared to over 13% of the U.S. population as a whole.

The researchers also note that a lack of diversity in academia poses a problem for the future of AI. In 2015, only 18% of computer science majors at U.S. universities were women, down from a high of 37% in 1984. According to university spokesperson John Beckman, women make up 32% of computer science majors at NYU. However, the researchers argue that attempts to fix the pipeline problem (the theory that men are hired at disproportionate rates because there aren’t enough women to hire) fail to address the systemic imbalances of power in the workplace.

Stern first-year Sara Liu, who co-founded an AI tool for insurance agents, said she found some of the researchers’ recommendations to be too aspirational for small startups like her own.

“I feel like a lot of what they propose [is] just very theoretical,” Liu said. “It’s not really practical, especially for smaller sized companies, where they need to find enough talent before they think about diversity.”

Gallatin graduate student Maham Khan studies data science and co-founded an AI startup that seeks to address discrimination in the workplace. Khan said that when hiring people to join her company, it was important to ensure diversity in her own team so they wouldn’t be producing biased algorithms.

“We were very conscious that having that mission that having people who understand the risk [of AI] and understand it [and] have lived experiences that would influence the work that we do,” Khan said. “We were very conscious of that, and asking people to sort of describe, [and] reflect on how their experiences would color any tech that they build.”

West added that she doesn’t have an entirely pessimistic view of AI, but that it should be heavily scrutinized to ensure it’s not discriminatory. The authors came to a similar conclusion in the study, writing that transparency and rigorous testing are essential before AI systems are deployed to the public.

“AI is an incredibly powerful tool,” West said. “As with any powerful tool, it’s important that we be able to apply scrutiny and understand the ways in which they’re used and embedded in the world around us.”

Email Akiva Thalheim at [email protected].