Artificial intelligence could make smartphones that rely on fingerprint identification more vulnerable to hacking, according to research by a team of faculty and students at the Tandon School of Engineering.

The researchers found that machine learning can create what they call DeepMasterPrints: synthetic fingerprints, generated through deep learning, that are likely to match a large number of other people’s fingerprints and can therefore be used to gain unauthorized access to phones and other devices secured by fingerprint recognition.

Julian Togelius, an associate professor of Computer Science and Engineering, co-authored the paper, “DeepMasterPrints: Generating MasterPrints for Dictionary Attacks via Latent Variable Evolution,” which was presented at a conference last month.

“Just like master keys are keys that you can open any lock with, we wanted fingerprints that could be masterprints,” Togelius said. “It comes from a casual observation that some people’s fingerprints just seem to be better at unlocking devices than others. So the hypothesis was that maybe we can find some fingerprints that are very good at spoofing, at faking being recognized.”

For convenience, the fingerprint sensors in most smartphones capture only partial prints rather than full prints. Full prints would require users to place a finger on the sensor the same way each time they unlock their device, and they would also require larger biometric sensors than most smartphones currently have, which would almost certainly raise the cost of the device.

Previous research had created MasterPrints that consisted only of minutiae points: the key features of a fingerprint, such as ridge endings and bifurcations, that a matcher compares when deciding whether to let a person into a device. In the latest paper, lead author Philip Bontrager, a doctoral candidate in Computer Science and Engineering, and his team took the research a step further: They used machine learning to create complete, natural-looking fingerprint images that maximize the number of matches to other fingerprints.
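The paper’s title names the technique, latent variable evolution: instead of drawing a fingerprint directly, a search algorithm adjusts the hidden numbers (the “latent vector”) fed into an image generator and keeps changes that make the output match more enrolled prints. The Python sketch below is only a toy illustration of that idea, under stated assumptions. The generator and matcher are hypothetical stand-ins (simple arithmetic on short lists of numbers, not a trained neural network or a real fingerprint verifier), the enrolled “prints” are made-up data, and the search is a basic (1+1) evolution strategy that is not necessarily the one the researchers used.

```python
import random

LATENT_DIM = 8  # length of the hypothetical latent vector


def toy_generator(z):
    """Hypothetical stand-in for a trained generator: clamps each latent
    value to [-1, 1] and treats the result as the print's feature vector."""
    return [max(-1.0, min(1.0, v)) for v in z]


def toy_matches(features, enrolled, threshold):
    """Hypothetical stand-in for a fingerprint matcher: declares a match when
    the squared distance between two feature vectors is below `threshold`.
    A smaller threshold means a stricter (lower false-match-rate) system."""
    distance = sum((a - b) ** 2 for a, b in zip(features, enrolled))
    return distance < threshold


def fitness(z, dataset, threshold):
    """Number of enrolled prints matched by the print generated from z."""
    features = toy_generator(z)
    return sum(toy_matches(features, enrolled, threshold) for enrolled in dataset)


def evolve(dataset, threshold, generations=300, sigma=0.3, seed=0):
    """(1+1) evolution strategy: mutate the best latent vector with Gaussian
    noise and keep the mutant whenever it matches at least as many prints."""
    rng = random.Random(seed)
    best = [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]
    best_score = fitness(best, dataset, threshold)
    history = [best_score]
    for _ in range(generations):
        child = [v + rng.gauss(0.0, sigma) for v in best]
        score = fitness(child, dataset, threshold)
        if score >= best_score:
            best, best_score = child, score
        history.append(best_score)
    return best, best_score, history


def make_toy_dataset(n_users=200, seed=1):
    """Made-up enrolled prints that share a common pattern plus individual
    noise, loosely mimicking features shared by many people's fingerprints."""
    rng = random.Random(seed)
    common = [0.5] * LATENT_DIM
    return [[c + rng.gauss(0.0, 0.4) for c in common] for _ in range(n_users)]


if __name__ == "__main__":
    data = make_toy_dataset()
    _, score, _ = evolve(data, threshold=1.0)
    print(f"toy master print matches {score} of {len(data)} enrolled prints")
```

Lowering `threshold` in this toy makes the matcher stricter, loosely analogous to choosing a lower false match rate, and the same search then typically finds a print that matches fewer enrolled users.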

These images, the DeepMasterPrints, can then be used to conduct dictionary attacks against fingerprint verification systems, getting past them by statistical chance rather than by copying any one person’s finger.

“When Yahoo had all those passwords leaked, for example, if you had a dictionary of the most common passwords, you could get a lot of people’s passwords in that data set, even if you couldn’t get everybody’s, just by using the most common password,” Bontrager said. “This is taking the same idea as through the fingerprint. The algorithm can find the most common features that are shared among a lot of people’s fingerprints. So this attack that we’re doing, the Deep MasterPrint attack, can be considered a type of dictionary attack.”
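Bontrager’s password analogy can be made concrete with a short Python sketch. It assumes a made-up list of leaked passwords and a hypothetical `dictionary_attack` helper: the attacker tries only the few most common passwords and still gets into a sizable share of accounts, which is the same bet a DeepMasterPrint makes on features shared by many fingerprints.

```python
from collections import Counter


def dictionary_attack(leaked_passwords, dictionary_size):
    """Return the attacker's dictionary (the most common passwords) and the
    fraction of accounts whose password appears in it."""
    counts = Counter(leaked_passwords)
    dictionary = [pw for pw, _ in counts.most_common(dictionary_size)]
    guesses = set(dictionary)
    cracked = sum(1 for pw in leaked_passwords if pw in guesses)
    return dictionary, cracked / len(leaked_passwords)


# Made-up example data, not taken from any real leak.
leaked = [
    "123456", "123456", "123456",
    "password", "password",
    "qwerty", "hunter2", "correct horse", "tr0ub4dor", "letmein",
]

dictionary, success_rate = dictionary_attack(leaked, dictionary_size=2)
print(dictionary, success_rate)  # ['123456', 'password'] 0.5
```

Guessing just two passwords opens half of these ten toy accounts. The fingerprint version is harder, since it involves generating images and passing a matcher rather than comparing exact strings, but the underlying logic of targeting what is most common is the same.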

Although previous research had already established that devices could be susceptible to MasterPrint attacks, the DeepMasterPrints that Bontrager’s team evolved achieved a higher success rate in dictionary attacks.

The team evolved DeepMasterPrints for three security levels, each defined by a false match rate (FMR): how often the system wrongly accepts a fingerprint that does not belong to the enrolled user. At an FMR of 0.1 percent, the middle of the three levels, 23 percent of the DeepMasterPrints succeeded at spoofing, meaning they were recognized as a match for an authorized fingerprint they did not come from. That amounts to slightly more than one false match in every five attempts, whereas biometric verification systems usually have an error rate of less than one in one thousand.
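Using only the figures reported above, the gap can be worked out directly: a 23 percent spoofing rate at a setting that nominally allows 0.1 percent false matches is roughly 230 times that rate, and a little above one in five.

$$
\frac{23\%}{0.1\%} = \frac{0.23}{0.001} = 230,
\qquad
0.23 > \frac{1}{5} = 0.20
$$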

“Partial fingerprints are perhaps not as secure as people have thought them to be because we have found an effective way of attacking them using machine learning methods,” Togelius said.

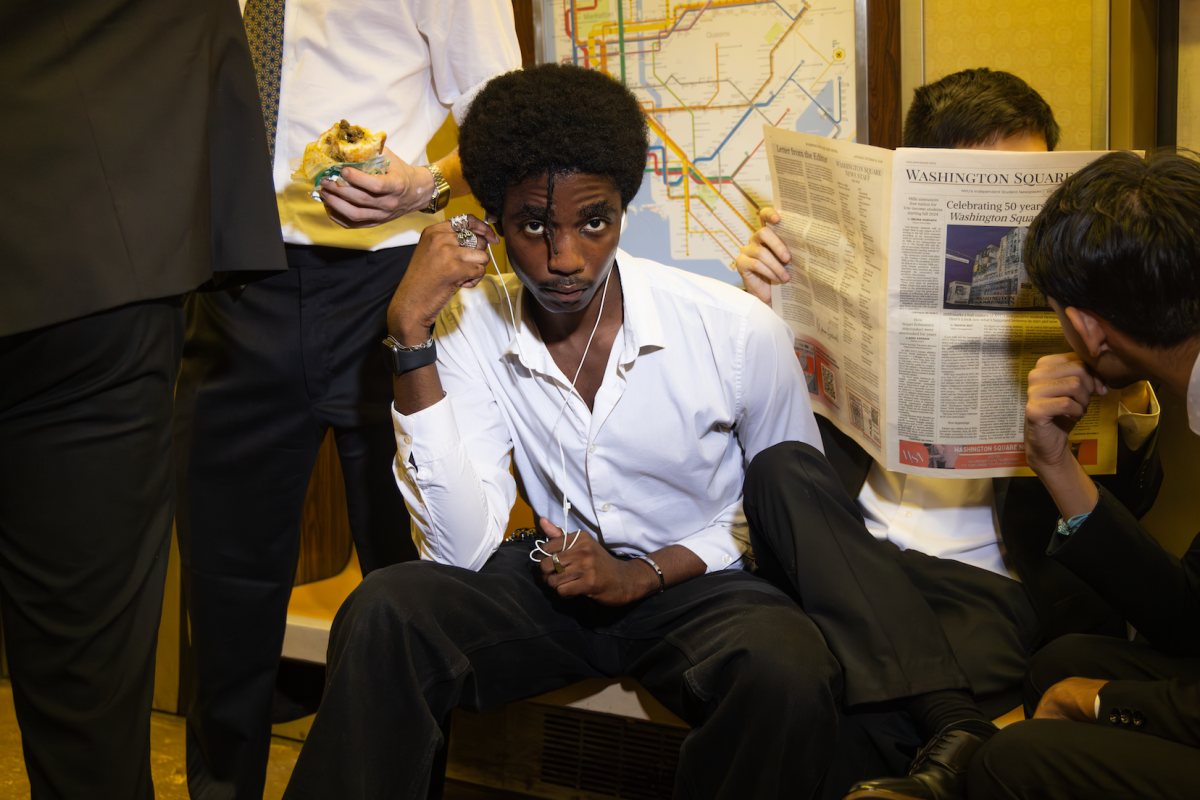

For LS first-year Bry LeBerthon, the accuracy of her phone’s fingerprint authentication system has always been in question.

“I’ve never really trusted technology such as fingerprint recognition or face scanning,” LeBerthon said. “After all, unlike traditional passcodes or security questions, sometimes it doesn’t even let me get into my own phone. How could I trust it to be infallible?”

Though the Tandon researchers’ results raise serious doubts about the security of biometric identification systems, Bontrager says consumers can still take precautions to avoid falling victim to false matches and the hacking that can follow.

“If you have a phone and it has a very tiny fingerprint sensor, say on the power button on the side, that’s not nearly as secure as the larger fingerprint sensor on the back,” Bontrager said. “And even then, the resolution of the sensor can make a big difference. If you’re security-conscious, be aware that they’re not all the same across all mobile devices.”

Manufacturers can also take steps to improve security before phones ever reach consumers.

“For people who are putting these systems into phones, they should be aware of this sort of attack, that when they’re designing their algorithm, they need to now test against AI, synthetic fingerprint types of attacks,” Bontrager said. “It’s important to use larger fingerprint sensors where possible and ideally even be able to do more testing that the fingerprints that they’re testing actually belong to a real finger and a real person.”

A version of this article appeared in the Monday, Nov. 26 print edition. Email Sarah Jackson at s[email protected].