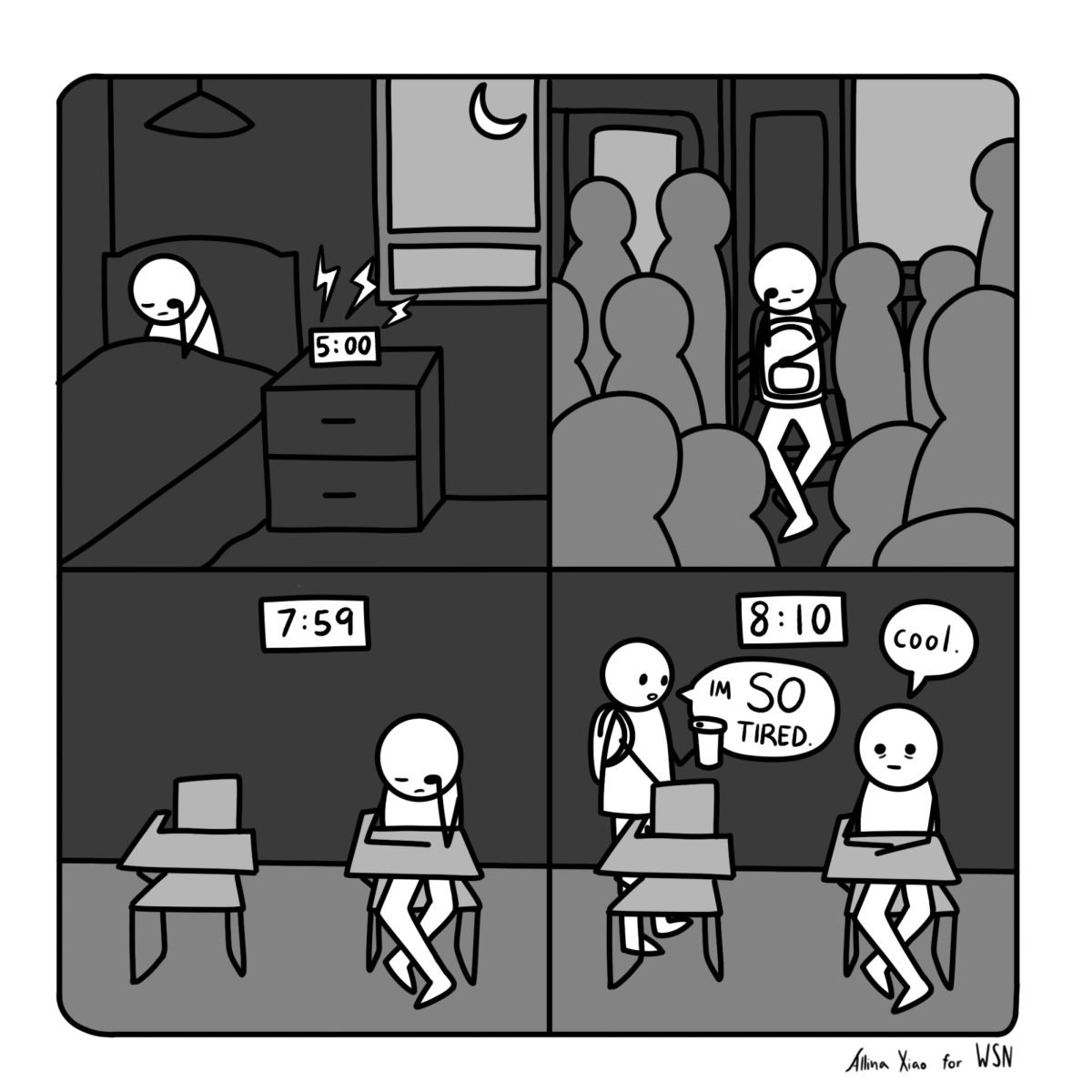

It is an early morning of the just-arrived winter. People I can see on the street from my window wear heavy coats, but it’s unclear how cold it is. I can open the window and let my natural skin sensors grab an approximate measurement, but I realize a much more accurate value can be obtained by pressing a button. “Siri, what’s the temperature outside?” I ask, with a Brazilian accent that most Americans think is Russian. “Brr! It is 32 degrees outside,” answers the piece of rectangular glass I hold. It is a female voice, with an accent of her own. Artificial. That’s probably how I would describe it.

The application, whose name is an acronym for Speech Interpretation and Recognition Interface, has encountered a wave of sarcastic, philosophical, flirtatious and mundane questions since it was made available on certain Apple iOS devices in October 2011. Countless jokes featuring Siri made their way through the nodes of the social-media graph, and books about her witty personality have been printed. But if you could take Siri on a trip through time to when your grandmother was 10 (fear not, the time travel paradox involves your grandfather), she would definitely qualify your talking device as “magic.” Perhaps she would even call Siri intelligent.

The question of whether or not Siri is intelligent may be difficult to answer; indeed, according to the definition she grabs from Wolfram Alpha when asked, there is no consensus about what it means to be intelligent — nor about what “meaning” means, as a matter of fact, but enough about metalinguistics. Below, I’ll discuss intelligence. But first, come back from your time travel and book a trip into your brain. This one is easier: simply think of your grandmother.

When you do, a specific area in the back of your brain, responsible for face recognition, activates. Moreover, it has been suggested that a single neuron fires when you think of her. It’s the “grandmother neuron” and, as the hypothesis goes, there’s one for any particular object you are able to identify. While the existence of such particular neurons is pure conjecture, at least two things about the architecture of the visual cortex have been deduced. One, functional specialization: there are areas designated to recognize specific categories of objects, such as faces and places. Two, hierarchical processing: visual information is analyzed in layers, with the level of abstraction increasing as the signal travels deeper in the architecture.

Computational implementations of so-called deep learning algorithms have been around for decades, but they were usually outperformed by shallow architectures. Since 2006, new techniques have been discovered for training deep architectures, and substantial improvements have followed in algorithms aimed at tasks that are easily performed by humans, like recognizing objects, voice, faces and handwritten digits.

Deep learning is used in Siri for speech recognition, and in Google’s Street View for the identification of specific addresses. In 2011, an implementation running on 1,000 computers was able to identify objects from 20,000 different categories with record, though still poor, accuracy.

These achievements rekindled popular interest in Artificial Intelligence. Well, not as popular as vampire literature, but definitely among computer scientists. The technological improvements are substantial, especially when compared to what happened, or didn’t happen, in previous decades, and we are closer than ever to achieving AI resembling a human’s intelligence.

This technology is astounding, but we are still far from the proper implementation. As AI researcher Andrew Ng pointed out, “My gut feeling is that we still don’t quite have the right algorithm yet.”

The reason Ng feels this way is that these algorithms are still, in general, severely outperformed by humans. You can recognize your grandmother, for instance, just with a quick glance, by the way she walks. Computers can barely detect and recognize faces from a head-on view. Similar issues exist for recognizing songs, identifying objects in pictures and movies and a wide range of other tasks.

Whether comparison with human performance is a good criterion for intelligence is debatable. But I’ll leave that discussion for part two.

Marcelo Cicconet is a contributing columnist. Email him at [email protected].