Face detection, which consists of finding the approximate center points of faces in a digital image, is one of the most notable problems in computer vision, a field of research that deals with image understanding. Considerable progress was made in the first decade of this century, and algorithms for it are now widely available in photo-related devices and software applications.

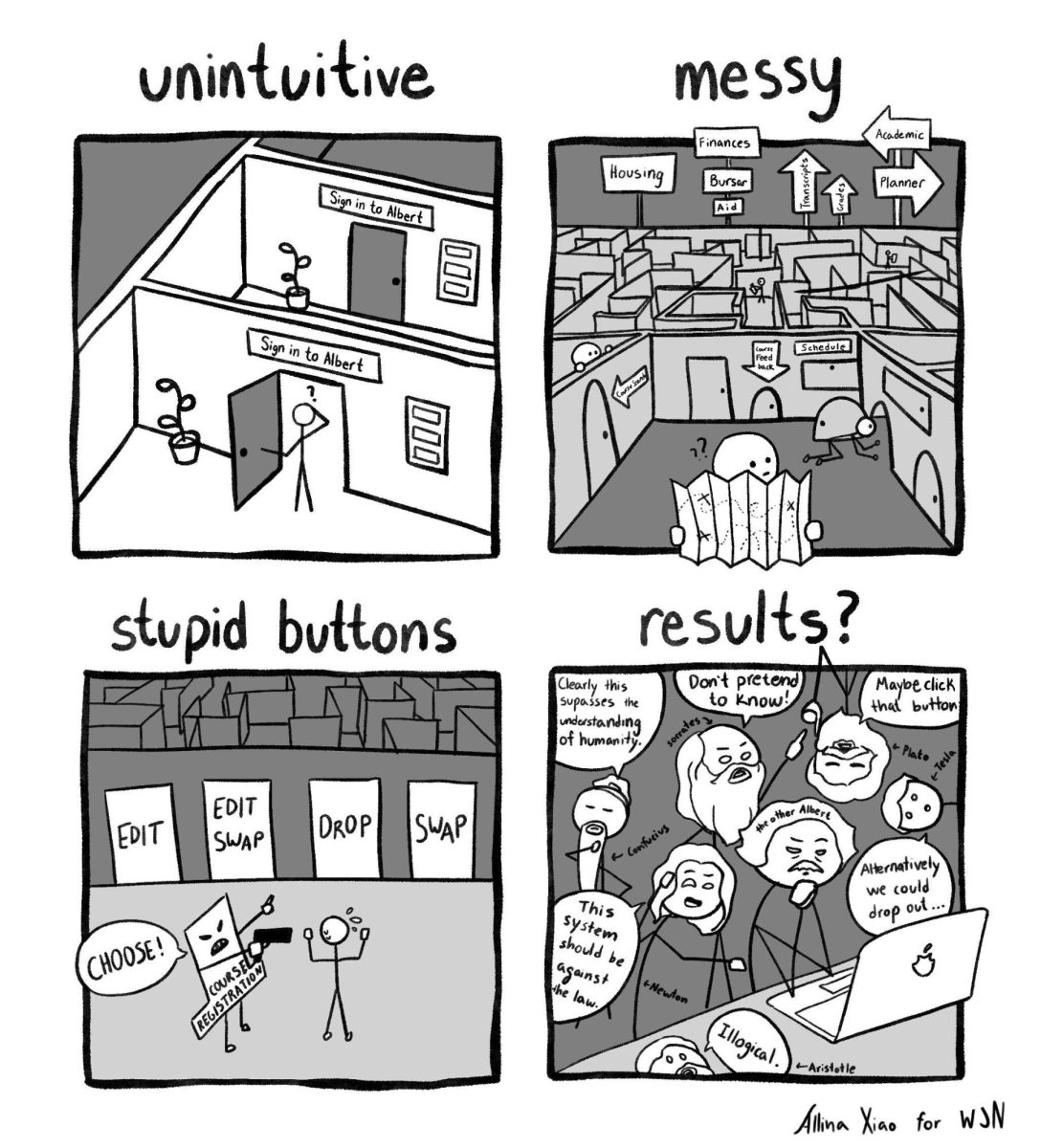

Let us suppose that you find yourself interested in learning about the state-of-the-art advances in face detection. After a quick look at its Wikipedia article, whose text lacks objectivity and credibility, you might decide to ask Google Scholar or Microsoft Academic Search for help. The first will get you over 2.8 million results; the second, about 3,800 publications. Trying not to panic at those numbers and lose interest, you hope there are better ways to narrow your search.

As it happens, there are. You can restrict your Google Scholar search to results published this year alone: 45,700. “Keep calm and carry on,” the popular Internet meme reminds you. Try excluding patents and citations: 39,600 results. Sigh. Let us see what Microsoft Academic Search has to offer: a list of 598 conferences and 231 journals. “Not so bad,” you think. After all, they can be sorted by several different criteria. You search Google for “top conferences in computer vision,” and the first result is a Microsoft Academic Search list of conferences topped by the Conference on Computer Vision and Pattern Recognition. This conference alone lists 78 publications related to face detection since 2012. Consider that a publication generally runs at least six pages, and do the math.

The flooding of academic articles is not a problem per se. Except in rare counterexamples suitable for philosophical discussions, when it comes to knowledge, the more, the better.

The issue, of course, is quality. Is it too easy to publish? Not really. Anyone who has ever tried to publish something, even in non-mainstream venues, knows that reviewers are not the kindest of people. In fact, gratuitously hostile reviews are not uncommon. Yet the best scientific work on any topic is obscured by sketches of ideas with only potential usefulness, tedious variations of methods that outperform previous versions by half a percent, and other findings of dubious significance. If low-quality work still makes it past the review filter, the pressure to publish must be very high.

To mention two numbers: in the United States, about 20,000 doctoral dissertations were produced in 2009. Among biological sciences graduates, by 2006 only 15 percent held tenured positions six years after graduating. Most of the rest end up as postdocs until they publish enough to get onto the tenure track, or go into industry to steal jobs that undergraduates could do just fine.

This overflow of academic articles makes it very difficult to keep track of research while diluting the impact of every existing article.

A version of this article appeared in the Wednesday, Dec. 5 print edition. Marcelo Cicconet is a contributing columnist.