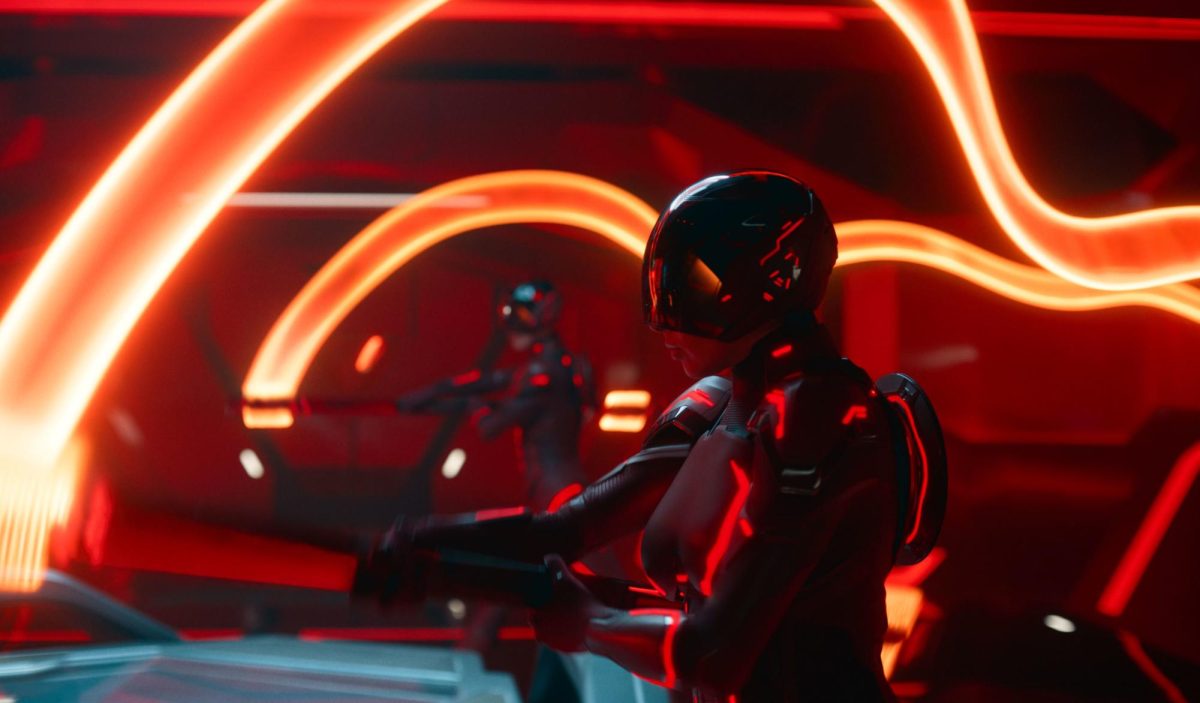

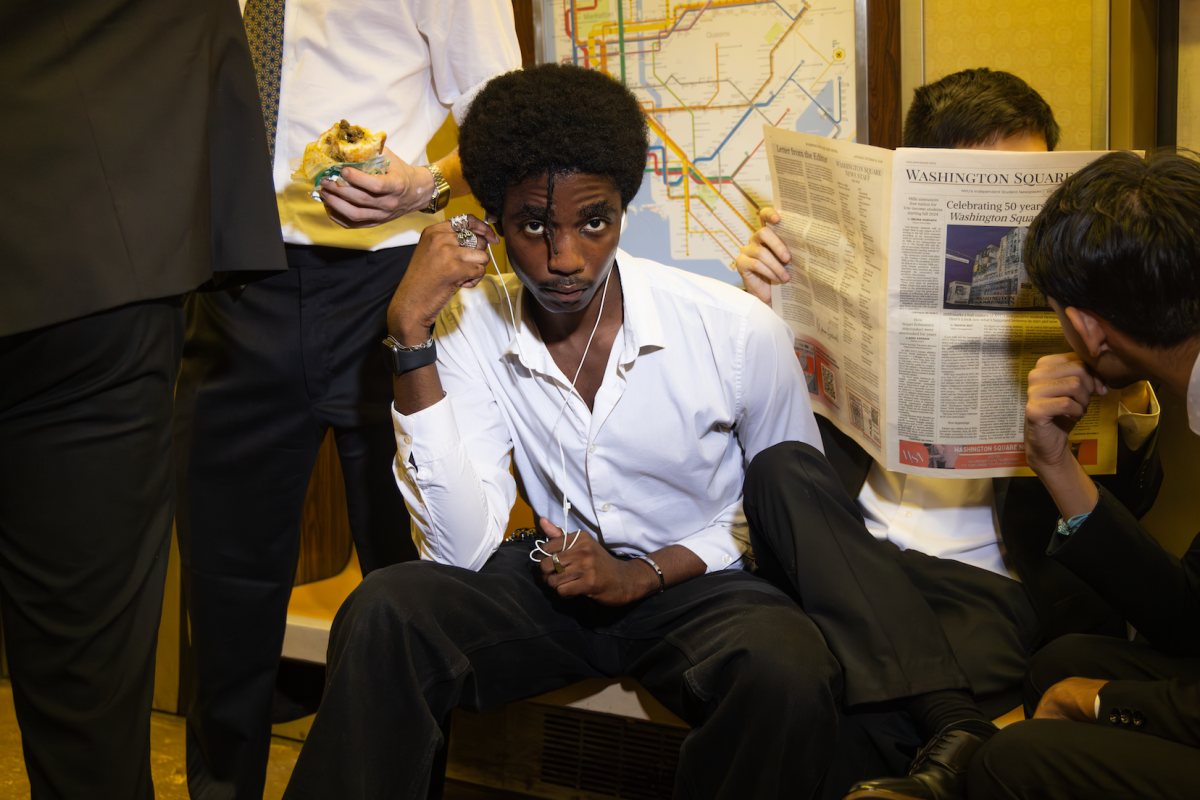

Laughter rippled through NYU Law School’s Greenberg Lounge Monday morning after Kathryn Harrison, founder and CEO of DeepTrust Alliance, a coalition fighting digital disinformation, played a video in which actor Jordan Peele used deepfake technology to impersonate former President Barack Obama.

Deepfakes are fabricated videos made to appear real using artificial intelligence. In some cases, the technology realistically superimposes one person’s face and voice onto another’s.

The technology can be used for harassment, spreading disinformation, manipulating the stock market, theft and fear-mongering, Harrison said.

Harrison and other experts spoke Monday at Vanderbilt Hall during a conference hosted by the NYU Center for Cybersecurity and the NYU Journal of Legislation & Public Policy. The event aimed to raise awareness of this deceptive technology and to explore technological, legal and practical ways to combat it.

The speakers included journalists as well as legal and cybersecurity experts, each of whom tackles the problems posed by the rapidly developing technology in a different way.

The room fell silent as Harrison continued her keynote, describing how the technology was used to harass Rana Ayyub, an Indian journalist critical of Prime Minister Narendra Modi, by inserting her face into pornographic material.

“Imagine if this was your teenage daughter, who said the wrong thing to the wrong person at school,” Harrison said.

Judi Germano, a distinguished fellow at the NYU Center for Cybersecurity, said the solution to deepfakes is twofold.

“There is a lot of work to be done to confront the deepfakes problem,” Germano told WSN. “In addition to technological solutions, we need policy solutions.”

Germano moderated the event’s first panel, which focused on technology, fake news and deepfake detection. She also discussed the role deepfakes play in spreading disinformation.

However novel the technology may be, experts such as Corin Faife, a journalist specializing in AI and disinformation, consider deepfakes a new form of an old problem.

“One of the important things for deepfakes is to put it into context of this broader problem of disinformation that we have, and to understand that that is an ecosystemic problem,” Faife explained to WSN in an interview. “There are multiple different contributing factors, and [the technological solutions] are no good if people won’t accept that a certain video is false or manipulated because of their preexisting beliefs.”

This line of thought is why some are hesitant to push through legislation on deepfake technology. Ben Wizner, director of the American Civil Liberties Union’s Speech, Privacy and Technology Project, took this position during the second panel, which addressed how legislation should evolve to deal with deepfakes.

Because deepfakes are a means of committing illegal acts, Rob Volkert, vice president of threat investigations at Nisos, said he understands his fellow panelist’s position. Volkert said he also struggles to pinpoint whom to hold responsible.

“The responsibility is on the user, not on the platform,” Volkert told WSN in an interview, after explaining that the market for deepfake software does not need to hide on the dark web.

Deepfake technology is an ominous cloud hanging over the approaching presidential election, which made it an appropriate topic for the event, said Lisa Femia, a board member of the Journal of Legislation & Public Policy.

Facebook’s Cybersecurity Policy Lead Saleela Salahuddin, who spoke during the conference, raised a point about public trust during elections.

“There should not be a level of distrust that we therefore trust nothing,” Salahuddin told the audience.

Email Andrew Califf at [email protected].