Just last week, DeepSeek, the latest competitor to ChatGPT, burst onto the scene, immediately causing an uproar among U.S. tech companies and the recently inaugurated Trump administration. DeepSeek — which may appear to the average consumer as yet another artificial intelligence chatbot — has sent ripples through Silicon Valley and the stock market thanks to its open-source nature, sparking a conversation on the ethics of AI development.

Unlike paid AI products such as ChatGPT Pro, DeepSeek is completely free to use, but that’s not to say there aren’t any connections between the rival chatbots. The program was allegedly a product of distillation, a method of language model training that has recently become controversial, in which a new AI model is trained by asking an existing “teacher” model (in this case, ChatGPT) a plethora of questions and learning from its answers.

The accusations of distillation against DeepSeek have drawn perhaps the strongest focus from the media and tech industry. Distillation allows AI models to be trained much faster and at a fraction of the usual development cost, but the result depends directly on the quality of the teacher model. This raises a significant question regarding the future of AI in the tech industry: Why would large companies invest hundreds of millions in research and development if rivals can create almost identical models at a far lower cost?

Accusations of distillation test the legal boundaries of AI and may come to define how we approach private intellectual property regulations in the age of AI and big data. We may be entering a tech landscape where replication outpaces original development, and now the question becomes whether this will be a period of unprecedented innovation or technological stagnation.

OpenAI’s recent investments, including its $500 billion partnership with SoftBank and Oracle, will seem less worthwhile if its AI infrastructure continues to appear exorbitantly overpriced. DeepSeek was roughly 16 to 33 times cheaper to train than ChatGPT. This points to a larger problem for the tech industry if glamorized corporate espionage becomes the norm among a sea of carrion-feasting AI companies.

Others in the industry argue that these outcomes were expected in a maturing, saturated market. Dario Amodei, CEO of AI company Anthropic, argued that the reduced costs were predictable, with DeepSeek simply serving as a data point on the expected cost curve of AI models. Replicated innovation is a familiar concept in the 21st century: in the smartphone market nearly two decades ago, Samsung and Google produced phones rivaling the touch-screen technology of the iPhone.

DeepSeek has also raised concerns that it is restricted and heavily moderated by the Chinese Communist Party. When asked about the Tiananmen Square Massacre or the 2019 protests in Hong Kong, the chatbot begins to generate a response, but quickly erases the output and says: “I’m not sure how to approach this type of question” or “Let’s talk about something else!” With DeepSeek becoming such a large player in the AI industry at a global level, it is imperative to recognize the implications of this censorship for free speech and the shaping of global opinion. Because the technology was developed and based in China, its operations are subject to the jurisdiction of the CCP.

Security concerns have also proven to be a predominant source of anxiety among users when questioning the ethical implications of AI. With the handling of American data currently being discussed in the White House in relation to TikTok, DeepSeek deserves the same scrutiny. A key and concerning difference between the two companies is that while TikTok stores user data in the United States, Malaysia and Singapore, DeepSeek stores its data on servers located in China. Recently, a network security company found an exposed DeepSeek database leaking sensitive user information, such as chat histories. Because the database was left unsecured, hackers could not only access user data but also take broader control over the database itself.

If a young hedge fund analyst can essentially bootleg the most widespread large language model there is to create a cheaper, open-source competitor, then what’s to stop anyone else from piggybacking off existing models? We should be concerned that AI innovation could become an uncontrollable spiral of imitation. Without setting guardrails, we may find ourselves unable to distinguish between human-made models and machine mimicry.

Instead of looking at DeepSeek as just another chatbot, we should recognize it as a sign that the AI industry is accelerating uncontrollably. DeepSeek should serve as a signpost for how the government oversees AI development and usage. The path for development in AI must be a careful balance between innovation and security. Rather than focusing on producing models rapidly, governments must set controls that ensure AI models have privacy policies that robustly protect user data. The AI industry needs to be properly watched and regulated before its boon turns into a bane. Otherwise, we risk AI becoming an unstoppable force of self-replicating mediocrity rather than a tool defined by and promoting innovation.

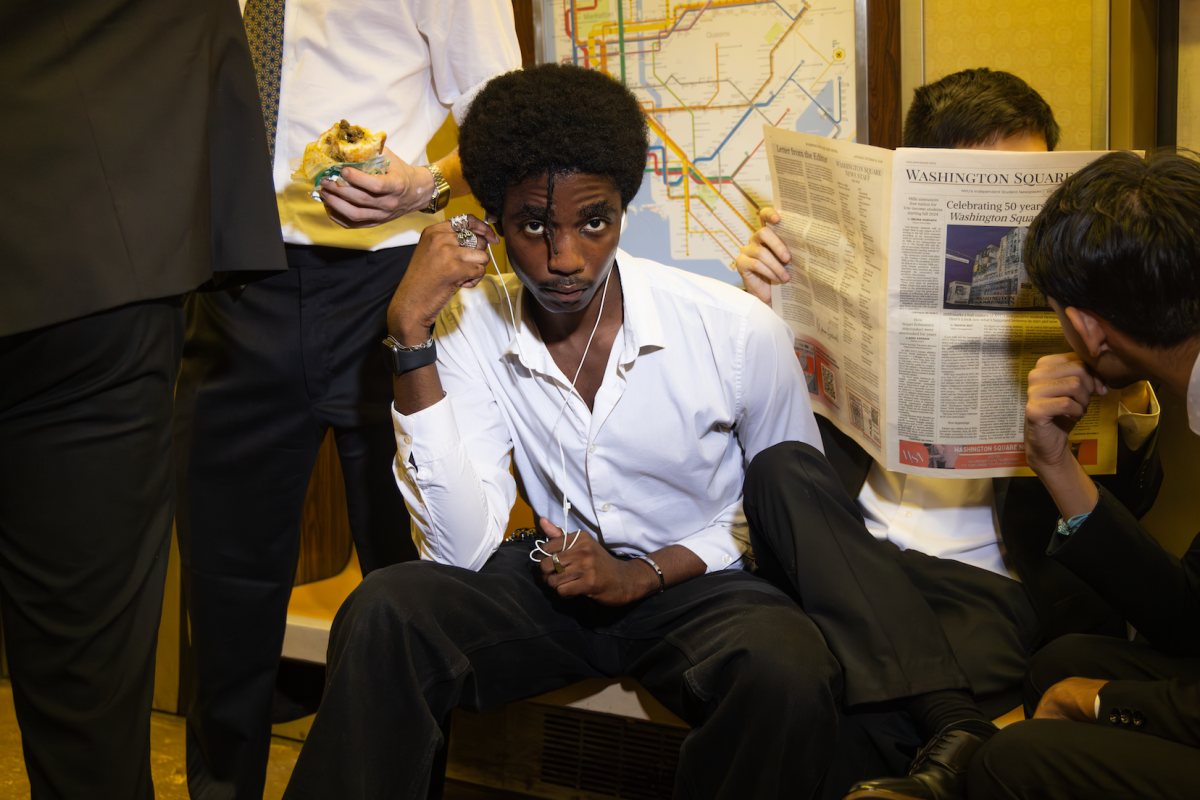

WSN’s Opinion section strives to publish ideas worth discussing. The views presented in the Opinion section are solely the views of the writer.

Contact Shanay Tolat at [email protected].