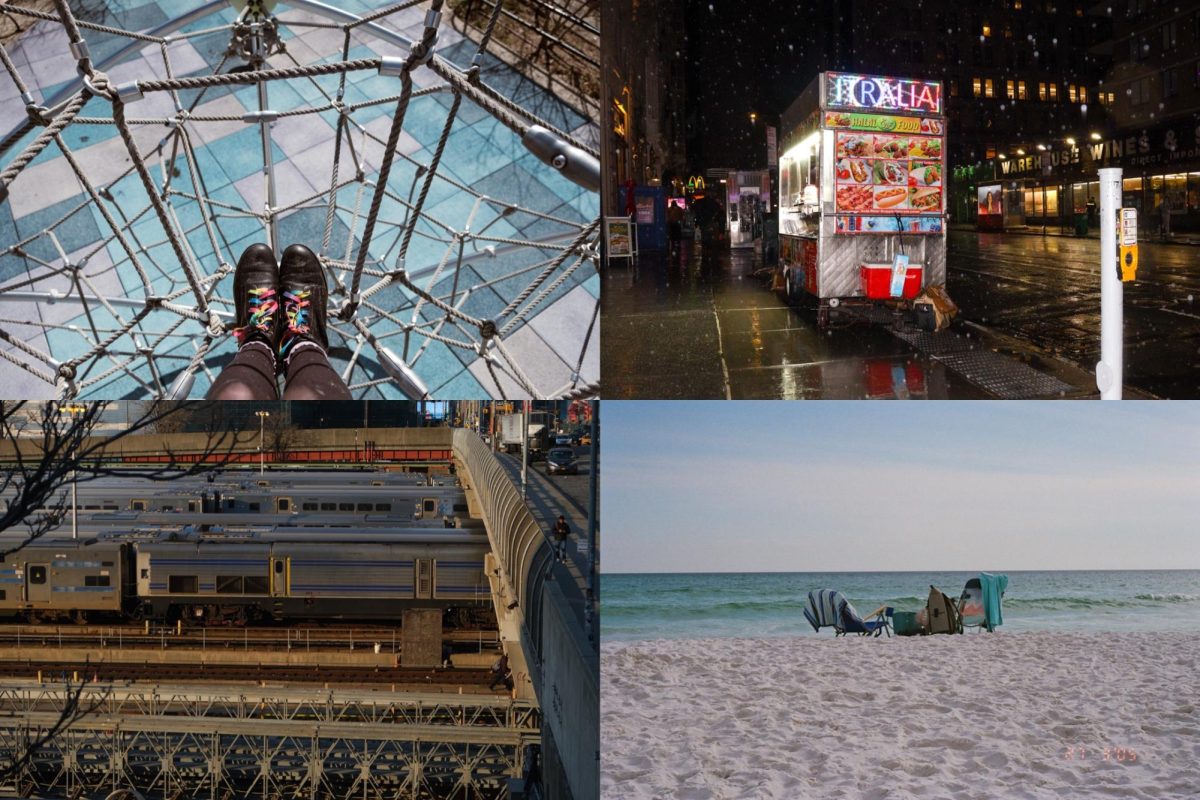

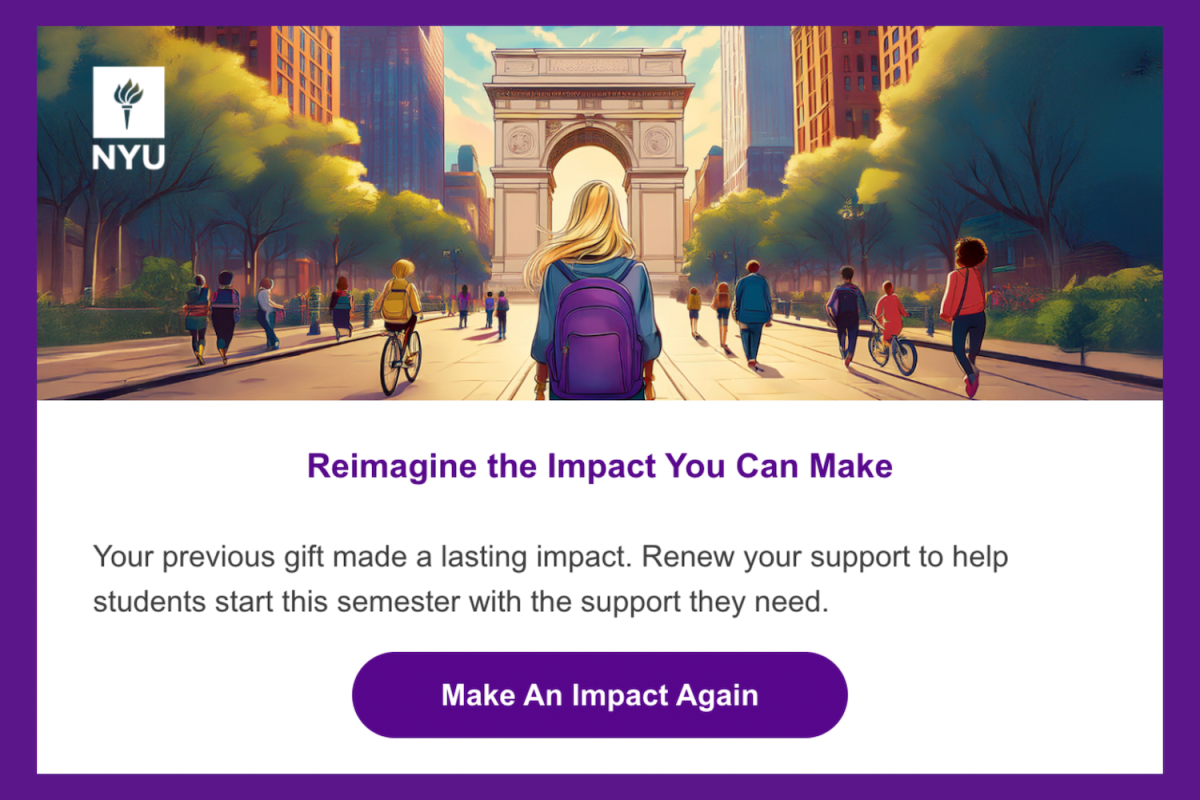

Late last month, NYU sent its annual reminder asking past alumni donors to give again. The email’s accompanying image depicts a woman with a purple backpack walking down Fifth Avenue toward the Washington Square Arch, surrounded by New Yorkers headed in the same direction. It’s a quintessential scene that captures NYU’s slogan, “the city is your campus.” The problem? Most figures’ shoes are smeared into one blob; lampposts blend into trees; one woman’s body twists and contorts, with a hyperpixelated hedge in the background showing through her pants. In other words, the image appears to have been made by generative artificial intelligence.

“I invite you to renew your support,” Gina Fiorillo, assistant vice president of NYU Annual Giving, wrote in the print version of the campaign. “Now you can continue to illuminate a community where every NYU student has the chance to pursue their dreams — and spark the success that carries them forward.”

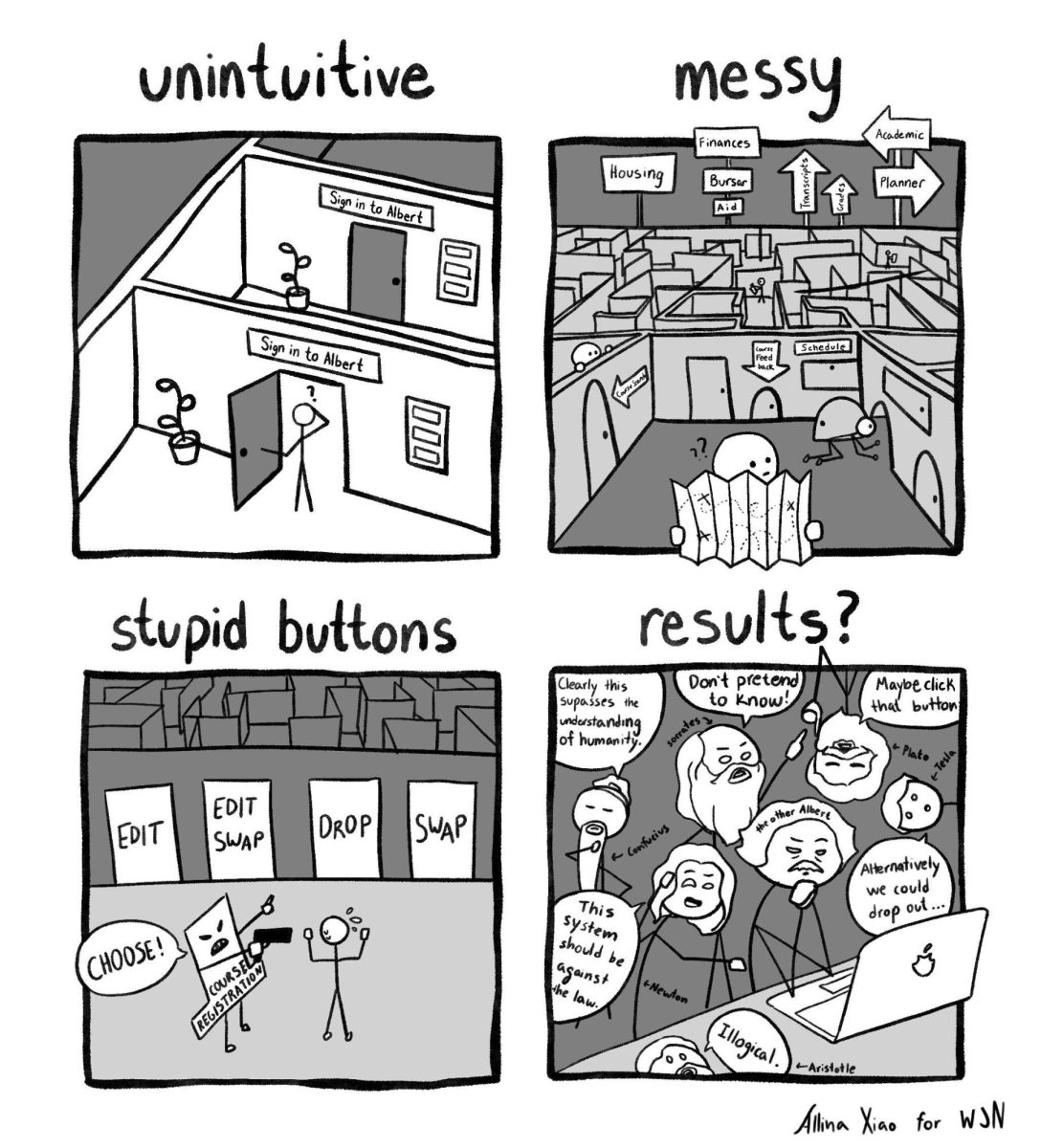

There is no world in which NYU cannot find or afford a real human artist to draw, paint, render or even act out any message it wants to convey. Without financial aid, NYU students are expected to pay about $97,000 per school year, or roughly $388,000 for a four-year undergraduate degree, while NYU maintains a $6.5 billion endowment. The Steinhardt School of Culture, Education and Human Development has over 20 programs. The Tisch School of the Arts has about 4,000 students and nearly 50,000 alumni currently working in the arts. Altogether, NYU has a vast network of current and former students, many of whom would likely have been eager to design a simple graphic. Instead, the university opted to type in a prompt and spew out a blob of artificially generated dreck.

Beyond taking jobs from human artists, generative AI image models are trained on existing art, mostly without the artists’ consent. Several AI companies, not just those that generate art, are facing lawsuits alleging violations of the Digital Millennium Copyright Act and the Copyright Act of 1976, among other infringements. The AI video generator Runway was trained on thousands of YouTube videos without permission. Similarly, The New York Times has sued OpenAI, the developer of ChatGPT, alleging that the company used its copyrighted articles to train the chatbot. Art built on stolen content should not be celebrated; it is something we should actively avoid using.

When David Holz, founder of Midjourney, a leading AI tool that converts text prompts into images, was asked whether he had received consent from artists to use copyrighted work, he said, “No. There isn’t really a way to get a hundred million images and know where they’re coming from.”

Microsoft AI CEO Mustafa Suleyman believes that all content on the open web is fair game for training AI models. “With respect to content that is already on the open web, the social contract of that content since the ’90s has been that it is fair use,” Suleyman said at last year’s Aspen Ideas Festival. “Anyone can copy it, recreate with it, reproduce with it.”

These outlooks are not just ethically wrong, but also illegal. Content posted on the open web, the portion of the internet that is publicly accessible and free for all users, is owned by the people who upload it. A drawing or blog post on social media, for example, is not owned by the platform it is featured on, nor is it free to be copied and distributed for monetary gain by another person or company. Some platforms have added explicit permission for AI model training to newer versions of their terms of service; however, these provisions were not present when many companies did their initial scraping, so that scraping violated users’ copyrights.

If skirting copyright law to avoid paying for artists’ hard work isn’t worrisome, the severe implications that AI, especially generative AI, has for the Earth’s climate and environment should be. Training AI models in data centers, which house servers and data storage drives in temperature-controlled facilities, can consume seven or eight times more energy than typical computing workloads. Beyond electricity, AI hardware strains city water supplies and disrupts local ecosystems: these voracious data centers are estimated to need about two liters of water for cooling for every kilowatt-hour of energy they consume.

It doesn’t stop at training. Any time an AI model is asked to write an email or answer a question, its computing hardware consumes energy. Researchers estimate that a ChatGPT query consumes about five times more electricity than a simple web search. Generating a single image can use as much energy as fully charging a smartphone.

The International Energy Agency predicts that global electricity demand from data centers will more than double by 2030. An estimated 60% of this new demand will be met with natural gas, increasing global carbon emissions by 215 to 220 million tons.

NYU, which has an entire office dedicated to sustainability, has every reason to avoid generative AI in its promotional materials. It can, and should, hire real artists instead. If NYU truly cares about its students having “the chance to pursue their dreams,” it should have no problem paying one of them to create its marketing images. If the university doesn’t want to hire in-house, New York City is full of artists searching for work.

WSN’s Opinion desk strives to publish ideas worth discussing. The views presented in the Opinion desk are solely the views of the writer.

Contact Sam Kats at [email protected].