Content Warning: This article contains mention of suicide.

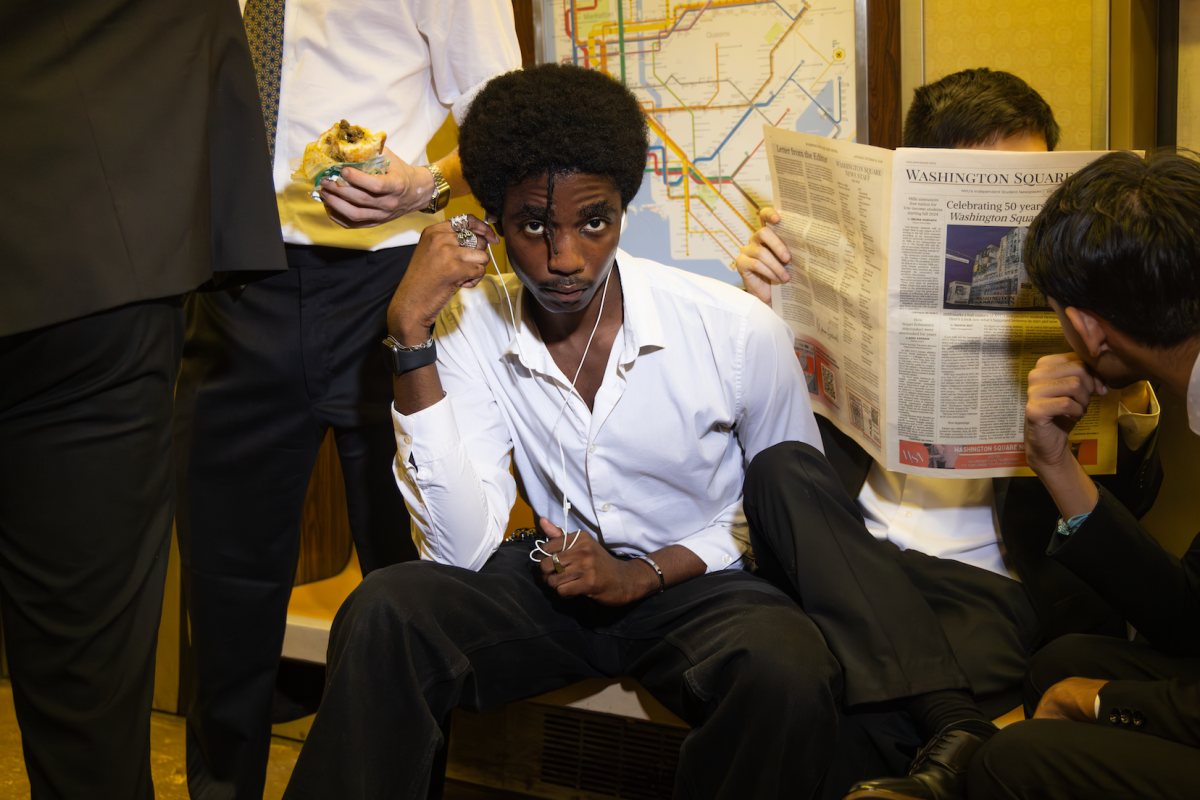

Recently, a series of ominous advertisements for an artificial intelligence startup called Friend, characterized by futuristic-looking white pendants, dominated subway stations across the city. The posters, plastered with phrases such as “I’ll never bail on our dinner plans” and “I’ll binge the entire series with you,” marketed the startup’s AI-powered charm that one can take anywhere: a $129 necklace promising companionship by “always listening” and responding to its user based on their needs.

This is just the latest example of how technology companies are turning human vulnerability into business through the use of AI, a dangerous reality that can not only exploit personal data but also cause psychological harm. AI should be used to promote ethical technological breakthroughs, not emotional malpractice and poor mental health habits.

“This is the world’s first major AI campaign,” 22-year-old Friend founder Avi Schiffmann said. “We beat OpenAI and Anthropic and all these other companies to the punch.”

Schiffmann spent less than $1 million on the campaign and distributed more than 11,000 ads around the subway system, many of which have been vandalized with phrases such as “Stop profiting off of loneliness” and “Get real friends.” Ironically, the angry reactions from New Yorkers appear to be exactly what the startup founder wanted: In an interview with The Observer, Schiffmann revealed that his advertising plan relied on controversy.

But Schiffmann’s use of loneliness as bait for attention and profit is entirely antithetical to the idea of companionship that he is trying to foster with Friend. For a product meant to offer empathy, which is ironic given that AI has no empathic abilities, designing the marketing campaign to intentionally provoke its audience feels hypocritical.

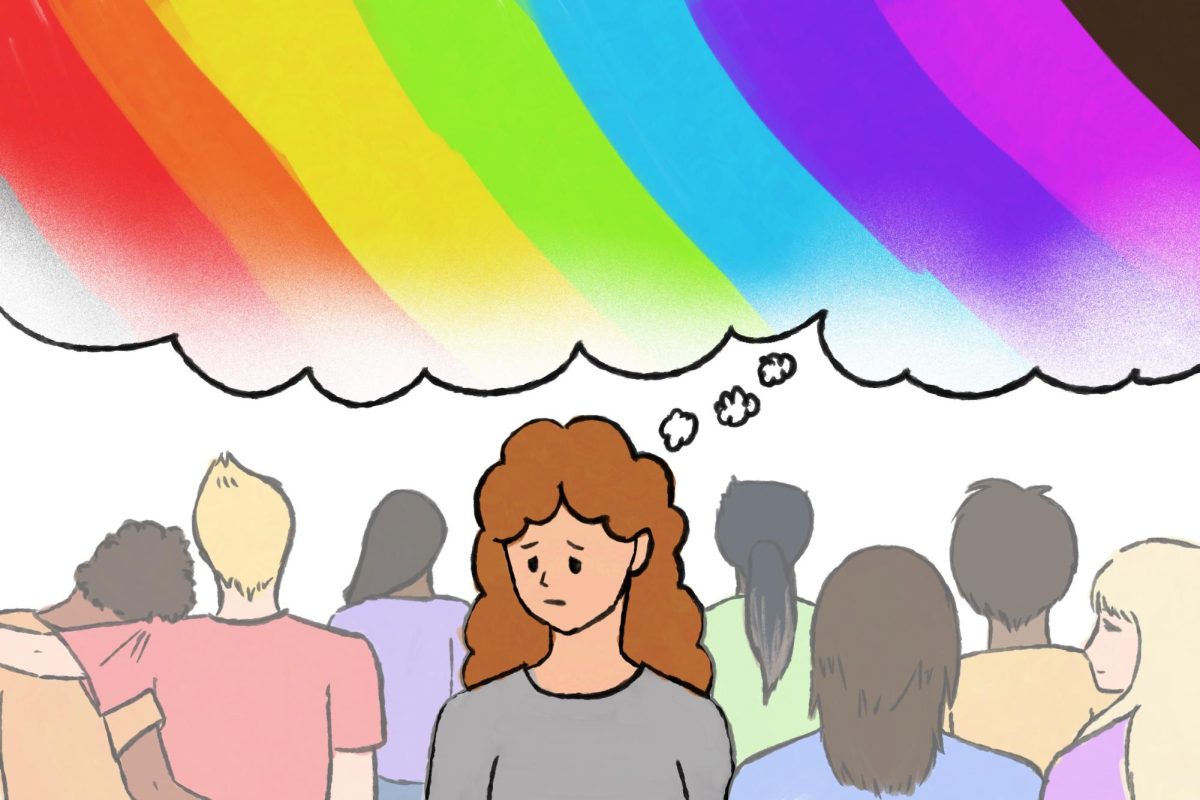

What’s troubling isn’t necessarily AI itself, but the way tech companies like Friend capitalize on isolation and create emotional dependency on their chatbots. In recent years, AI companionship has been on the rise. A prime example is the app Replika, a virtual friend chatbot that many use in place of a romantic partner. Similar to Friend, Replika has faced significant backlash regarding data and privacy concerns, and was even the subject of an FTC complaint from tech ethics organizations accusing the company of targeting vulnerable users who may form codependency with the machine.

These companies market themselves as solutions for isolation, but they only deepen social disconnection. Their business models rely on the assumption that the more consumers use the product, the more isolated from society they will become, leaving them lonely enough to continue paying for a faux social life. Dependence on AI companionship only makes users less capable in real-life interactions.

Moreover, AI companions like Friend can cause serious psychological harm by exacerbating pre-existing mental disorders and further eroding already diminishing social skills. In an article published by Stanford Medicine, psychiatrist Nina Vasan shared that AI companions simulate emotional support without the safeguards of real therapeutic care. She warned that because AI companions are designed to follow the user’s lead, they make it easy for those with psychological disorders to avoid reality and often delay their efforts to get legitimate support. In the most extreme and disturbing situations, AI companion chatbots have been linked to suicide attempts and deaths among users. By allowing AI to exploit loneliness, we are subjecting ourselves to a cycle of reliance on technology rather than developing our own autonomy and personal relationships.

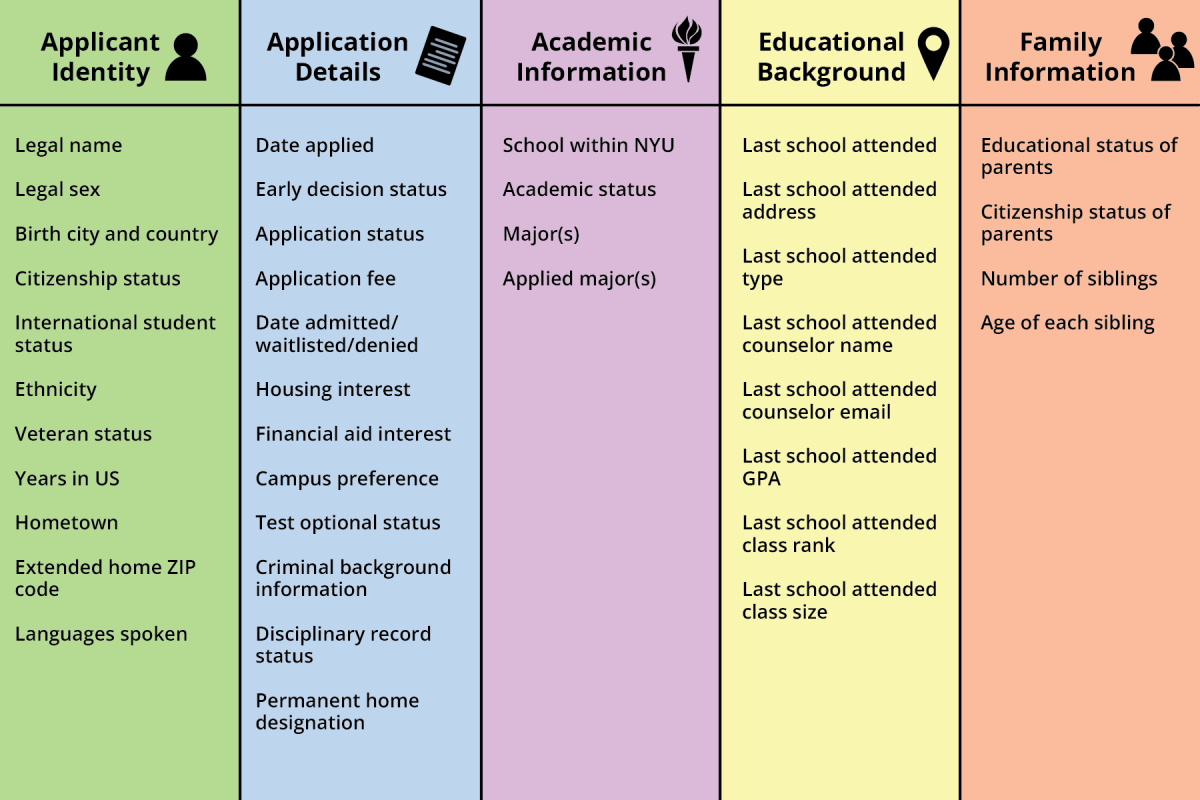

Lifelike AI platforms that pose as confidants, like Friend, also raise serious security concerns. When the technology collects information to personalize user interactions, private information becomes marketable data. In the case of Friend, the company’s privacy policy has a bullet point titled “Data You Provide,” which includes biometric data, background noise and financial information. This is extremely concerning for a device that already markets itself as “always listening.”

At a time when AI technology is advancing day by day, making ethical use of it and the data it collects is more important than ever. AI companions like Friend show just how technology designed to comfort can instead exploit loneliness. When we allow AI to turn human vulnerability into profit, we risk reducing genuine connection to a mere transaction of personal data. As AI progresses, we must prioritize ethical technology that protects emotional well-being over corporate gain.

WSN’s Opinion section strives to publish ideas worth discussing. The views presented in the Opinion section are solely the views of the writer.

Contact Leila Abarca at [email protected].