NYU researchers found they could measure an animal’s consciousness by comparing how closely its behavior resembles that of humans in different circumstances. In a Feb. 20 report, they argued that more animal-centered research could lead to a new understanding of consciousness in both humans and artificial intelligence.

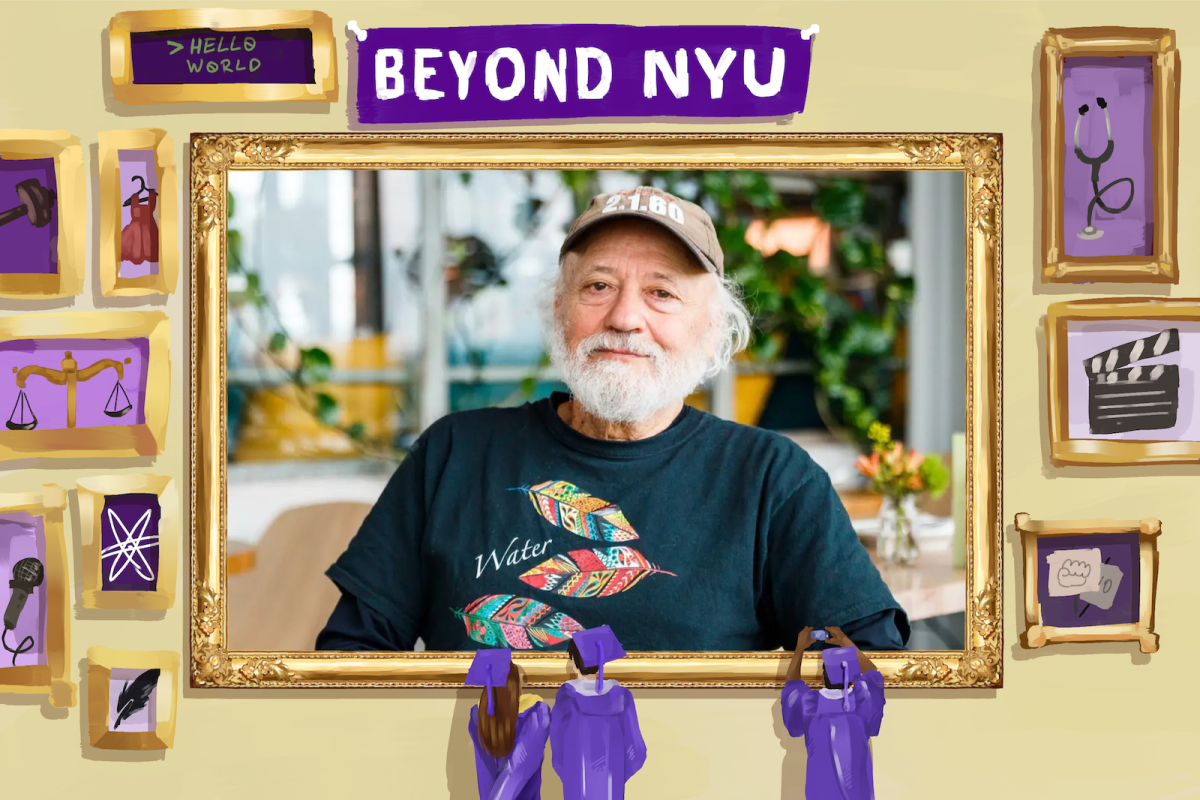

The paper’s authors, environmental studies professor Jeff Sebo, philosophy professor Kristin Andrews and London School of Economics professor Jonathan Birch, focused on how specific indicators of consciousness, such as pain or laughter, present themselves in humans and compared those behavioral and physical reactions to those of other species. In an interview with WSN, Sebo said that because “consciousness” does not have one definition, it is impossible for researchers to confirm that it will look the same in animals as in humans.

“If we find those markers in other animals, that is not proof that those animals are conscious,” Sebo said. “But it can still be evidence, and that can still be really useful for making decisions about how to interact with animals when we have uncertainty about their consciousness.”

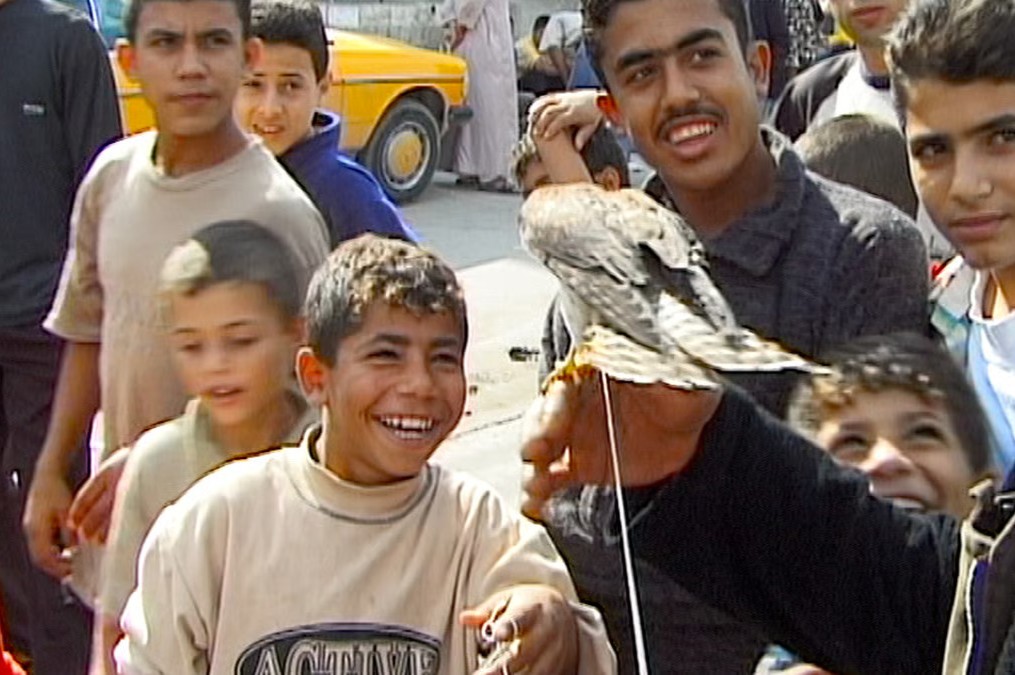

Sebo also said the team has seen clear examples of self-awareness in animals, including non-mammals like snakes and fish. Some animals can recognize wounds on their bodies when looking in a mirror and respond by nursing the injury much as people do, and others have exhibited signs that they may feel joy. However, Sebo explained that the researchers aim to conduct more diverse experiments in the future, because their published report relied largely on comparing signals of pain and excluded some species, like spiders.

Although AI systems cannot currently exhibit signs of consciousness the way humans and animals do because they lack similar anatomy and behavioral patterns, Sebo said that the group’s findings still have implications for technology research. He said it is possible for upcoming AI technology to integrate markers of consciousness similar to those in humans, so that it could experience elements such as perception, attention and memory independently rather than just mimic human behavior.

“AI companies have an incentive to race towards the creation of those AI systems because many of the features that are associated with consciousness are also associated with intelligence,” Sebo said. “Even if AI companies are not attempting to build conscious AI systems, they might accidentally build AI systems that have at least a realistic possibility of being conscious given the evidence available to us. That is a remarkable possibility.”

At the start of this academic year, NYU received a $6 million endowment to launch the Center for Mind, Ethics and Policy under Sebo to study the consciousness of animals and AI. The center has hosted numerous events, such as book talks and a two-day conference for experts in environmentalism and animal studies to discuss the legal and political status of nonhumans.

“There are signs that laws are continuing to improve,” Sebo said. “People are opening up to the possibility of consciousness and moral significance in animals.”

Contact Audrey Abrahams at [email protected].