Reproducibility is one of the main pillars of the scientific method. It refers to the degree to which experiments and studies can be repeated by others and still yield the same results. For instance, if a study proposes a drug for a certain disease, the drug must be tested many times before it can appear in drugstores. Yet lately, there has been alarming news regarding this necessary practice.

In the past few months, a number of articles have discussed the problem of reproducibility in science, a practice that seems to have lost priority in the scientific community. Writing for phys.org in September, Fiona Fidler and Ascelin Gordon described a “reproducibility crisis.” An article in The Economist seconded this idea and emphasized how such a crisis harms science’s ability to correct itself.

Michael Hiltzik of The Los Angeles Times wrote a similar article at the end of October in which he cited a project from biotechnology firm Amgen, which tried to reproduce 53 important studies on cancer and blood biology. Only six of these studies could be reproduced with similar results. In another example, a company in Germany found that only a quarter of the published research backing its research and development projects could be reproduced. Findings like these severely damage the reputation of the scientific method.

The blame for this emerging crisis should be shared by scientists and the general media. Readers desire novelty, and journalists are eager to cater to them, so science news focuses more on new and exciting discoveries than on the important question of how reproducible each study is. Indeed, Hiltzik observed that “researchers are rewarded for splashy findings, not for double-checking accuracy.” Within the scientific community, the fault is that research quality is measured essentially by a study’s popularity rather than by its reproducibility.

One proposed solution is to create a reproducibility index attached to journals. In computer science, researcher Jake Vanderplas has proposed that the code used to produce results should be well-documented, well-tested and publicly available. Vanderplas convincingly argues that this “is essential not only to reproducibility in modern scientific research, but to the very progression of research itself.”

Scientific journals should provide tools that make it easy to judge the reproducibility of a study. Accompanying data, software code and reproducibility indexes should all be mandatory alongside the main manuscript. Rather than imposing an extra burden on researchers, these additions would facilitate the validation and comparison of results, speeding up the pace at which important, and popular, discoveries are made.

*An earlier version of this article misspelled reproducibility. WSN regrets this error.

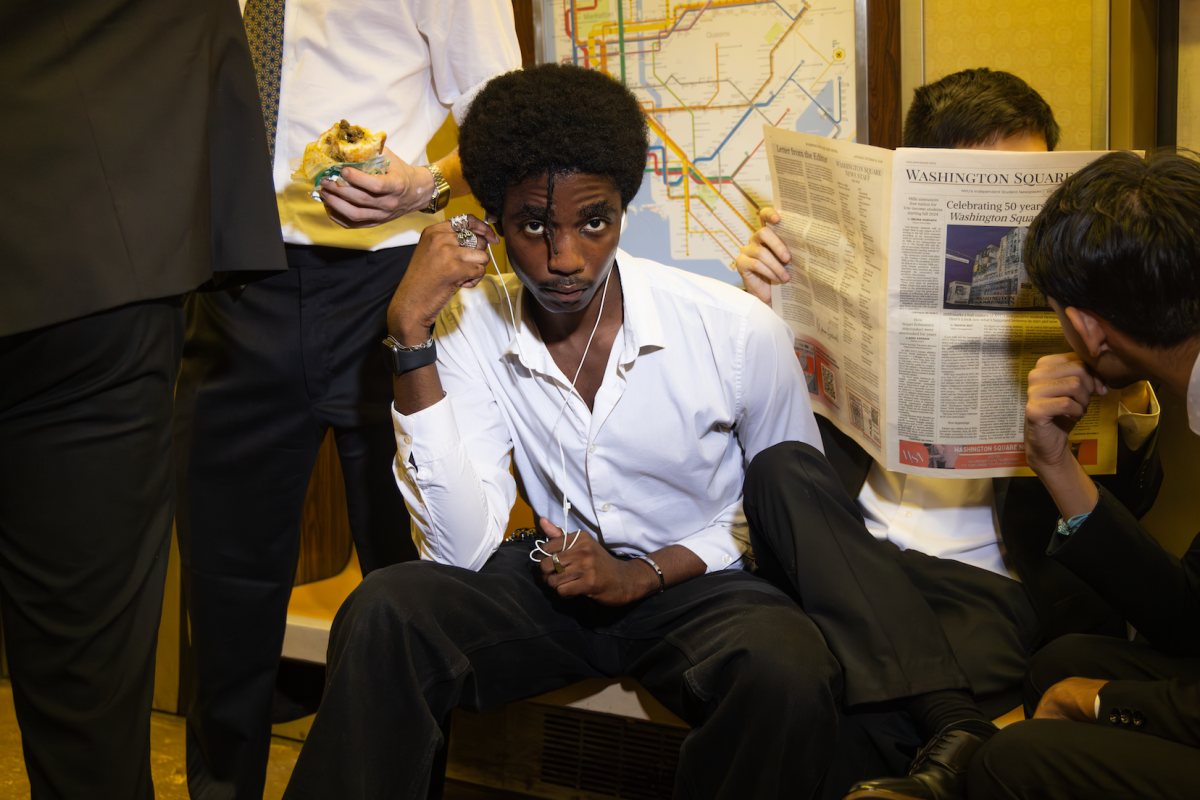

A version of this article appeared in the Tuesday, Dec. 3 print edition. Marcelo Cicconet is a staff columnist.